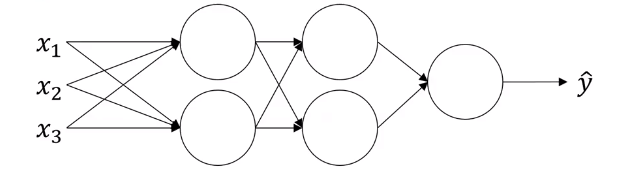

For deep neural network, here is how batch normalization is implemented.

and parameters are:

then compute and update parameters

Note that these is nothing to do with for momentum

This can be easily implementated with NN framework. For example with tensorflow, you can use tf.nn.batch_normalization

Ioffe, Sergey and Christian Szegedy. “Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift.” ICML (2015).

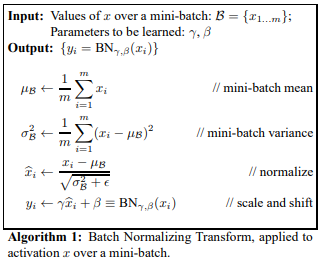

Similarly, batch normalization can be applied to mini-batch as follows:

and parameters are

Notice that is computed as , and batch norm will look at the mini-batch and normalize to first of mean 0 and standard variance, and then a rescale by and . It means that whatever is the value of is actually going to just get subtracted out, because during that batch norm step, you are going to compute the means of the and subtract the mean. So adding any constant to all of the examples in the mini-batch won’t change anything. Therefore, the parameters are

and you will compute

Since the shape of and is () so the shape of and is also ().

Assuming we are using mini-batch gradient descent:

for number of mini-batch

This also works with gradient descent with momentum, or RMSprop, or Adam. Where instead of taking this gradient descent update, mini-batch you could use the updates given by these other algorithms.