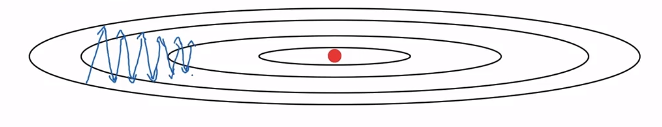

Red position represents the minimum.

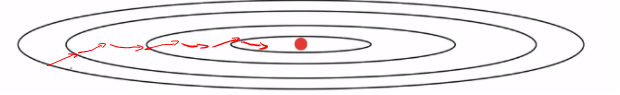

If you implement plain gradient descent, you can end up with huge oscillations in the vertical direction, even while it's trying to make progress in the horizontal direction.
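To make the oscillation concrete, here is a minimal sketch of plain gradient descent on a hypothetical elongated bowl $J(w, b) = \frac{1}{2}(w^2 + 25 b^2)$. It is steep along the vertical ($b$) axis and shallow along the horizontal ($w$) axis. The loss, the function names, the learning rate, and the starting point are all illustrative assumptions.

```python
def grad(w, b):
    # Hypothetical elongated bowl J(w, b) = 0.5 * (w**2 + 25 * b**2):
    # steep in b (vertical), shallow in w (horizontal).
    return w, 25.0 * b

def gradient_descent(w, b, alpha, steps):
    """Plain gradient descent; returns the list of visited (w, b) points."""
    path = [(w, b)]
    for _ in range(steps):
        dw, db = grad(w, b)
        w -= alpha * dw
        b -= alpha * db
        path.append((w, b))
    return path

path = gradient_descent(w=-10.0, b=1.0, alpha=0.07, steps=10)
for w, b in path[:5]:
    print(f"w={w:+.3f}  b={b:+.3f}")
```

With this step size, $b$ flips sign on every iteration while $w$ only creeps toward 0. Raising the learning rate to speed up $w$ does not help here: for any $\alpha > 0.08$ the $b$ updates overshoot by more than they correct and diverge.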

There’s another algorithm called RMSprop, which stands for root mean square prop, that can also speed up gradient descent.


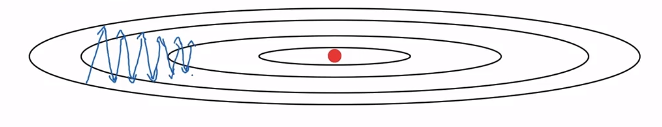

Assume the vertical axis is the parameter $b$ and the horizontal axis is the parameter $W$. We want learning to slow down in the $b$ direction and to speed up in the $W$ direction.

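On iteration $t$:

Compute $dW$, $db$ on the current mini-batch.

$S_{dW} = \beta S_{dW} + (1 - \beta)\, dW^2$ (small)

$S_{db} = \beta S_{db} + (1 - \beta)\, db^2$ (large)

Here the squaring is element-wise, so $S_{dW}$ and $S_{db}$ are exponentially weighted averages of the squared gradients.

Then update parameters:

$$W := W - \alpha \frac{dW}{\sqrt{S_{dW}}}, \qquad b := b - \alpha \frac{db}{\sqrt{S_{db}}}$$

In practice, you add a small $\epsilon$, something like $10^{-8}$, to each denominator ($\sqrt{S_{dW}} + \epsilon$ and $\sqrt{S_{db}} + \epsilon$) so that you never divide by a number very close to zero.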
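The update rule above can be sketched as a single function. This is a minimal illustration, assuming scalar parameters `w` and `b`. The function name `rmsprop_step` and the defaults `alpha=0.01`, `beta=0.9` are hypothetical choices, not values prescribed by the notes. The `eps` term is added to the square root, matching $\sqrt{S} + \epsilon$.

```python
import math

def rmsprop_step(w, b, dw, db, s_dw, s_db, alpha=0.01, beta=0.9, eps=1e-8):
    """One RMSprop update for scalar parameters w and b (hypothetical helper).

    s_dw and s_db are the running (exponentially weighted) averages of the
    squared gradients; the updated values are returned for the next iteration.
    """
    # Exponentially weighted average of the squared gradients
    s_dw = beta * s_dw + (1 - beta) * dw ** 2
    s_db = beta * s_db + (1 - beta) * db ** 2
    # Divide each gradient by the root of its mean square; eps avoids division by ~0
    w = w - alpha * dw / (math.sqrt(s_dw) + eps)
    b = b - alpha * db / (math.sqrt(s_db) + eps)
    return w, b, s_dw, s_db

# First iteration from zero-initialized averages, with db 40 times larger than dw
w, b, s_dw, s_db = rmsprop_step(1.0, 1.0, dw=0.5, db=20.0, s_dw=0.0, s_db=0.0)
print(1.0 - w, 1.0 - b)
```

On this first call both parameters move by roughly the same amount, about $\alpha / \sqrt{1 - \beta} \approx 0.0316$ with these defaults, even though `db` is 40 times `dw`. Dividing by $\sqrt{S}$ normalizes the step size separately in each direction.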

Because the function is sloped much more steeply in the vertical ($b$) direction than in the horizontal ($W$) direction, $db$ will be relatively large and $dW$ relatively small. So $db^2$, and therefore $S_{db}$, will be relatively large, whereas $S_{dW}$ will be smaller by comparison. The net effect is that your updates in the vertical direction are divided by a much larger number, which helps damp out the oscillations, while the updates in the horizontal direction are divided by a smaller number. So the net impact of using RMSprop is that your updates end up looking more like the red line in the figure.
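The damping argument can be checked on gradient streams alone, without a loss function. In this sketch the streams are hypothetical. `db` flips sign with magnitude 5, which mimics the vertical oscillation. `dw` is a small, consistent 0.1, which mimics the shallow horizontal slope. The helper `rmsprop_scaled_steps` is an illustrative name that applies the RMSprop scaling to one coordinate.

```python
import math

def rmsprop_scaled_steps(grads, alpha=0.01, beta=0.9, eps=1e-8):
    """Return the RMSprop step taken for each gradient in a 1-D stream."""
    s = 0.0
    steps = []
    for g in grads:
        s = beta * s + (1 - beta) * g ** 2          # running mean of squared gradient
        steps.append(alpha * g / (math.sqrt(s) + eps))
    return steps

# Hypothetical streams: db oscillates with large magnitude, dw is small and steady
db_stream = [5.0 if t % 2 == 0 else -5.0 for t in range(100)]
dw_stream = [0.1] * 100

alpha = 0.01
b_steps = rmsprop_scaled_steps(db_stream, alpha)
w_steps = rmsprop_scaled_steps(dw_stream, alpha)

print("plain GD |step b|:", alpha * 5.0, " |step w|:", alpha * 0.1)
print("RMSprop  |step b|:", abs(b_steps[-1]), " |step w|:", abs(w_steps[-1]))
```

Once the running averages warm up, both RMSprop steps settle near $\alpha = 0.01$. The vertical step is about 5 times smaller than plain gradient descent's $0.05$, and the horizontal step is about 10 times larger than its $0.001$. This is the "divide by a larger number vertically, a smaller number horizontally" effect described above.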