| Human | Algorithm | At fault? |
|---|---|---|
| Jane visits Africa in September. | Jane visited Africa last September. | B |
| | | R |
| | | R |
| | | R |
| | | B |
| | | R |
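Each row above gets its label by comparing how the model scores the human translation y* against the algorithm's output ŷ. If P(y*|x) > P(ŷ|x), the model preferred the better sentence but the search missed it, so beam search (B) is at fault. Otherwise the model itself ranks the worse sentence at least as high, so the RNN (R) is at fault. The sketch below assumes log-probabilities as inputs, which preserves the ordering. The helper names `attribute_fault` and `fault_fractions` are hypothetical.

```python
def attribute_fault(log_p_human: float, log_p_algorithm: float) -> str:
    """Return 'B' if beam search is at fault, 'R' if the RNN is at fault.

    log_p_human     -- log P(y* | x), score of the human translation y*
    log_p_algorithm -- log P(y_hat | x), score of the algorithm's output y_hat
    """
    # The model prefers y*, yet beam search returned y_hat: the search failed.
    if log_p_human > log_p_algorithm:
        return "B"
    # The model scores y_hat at least as high as y*: the model is the problem.
    return "R"


def fault_fractions(labels: list[str]) -> dict[str, float]:
    """Fraction of the analysed errors attributed to each component."""
    if not labels:
        return {"B": 0.0, "R": 0.0}
    total = len(labels)
    return {key: labels.count(key) / total for key in ("B", "R")}
```

With the six labels in the table (B, R, R, R, B, R), `fault_fractions` gives 2/6 for B and 4/6 for R, which would suggest that the RNN, rather than a wider beam, is the better place to spend effort.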

B stands for beam search and R for the RNN.

One of the challenges of machine translation is that, given a sentence in one language, there is often more than one good translation in another language. So how do we evaluate our results?

A common way to do this is the BLEU score. BLEU stands for bilingual evaluation understudy.

The intuition is: as long as the machine-generated translation is pretty close to any of the references provided by humans, then it will get a high BLEU score.

Let’s take an example:

BLEU score on bigrams

| Role | Sentence |
|---|---|
| Input X (French) | “Le chat est sur le tapis.” |
| Reference Y1 | “The cat is on the mat.” |
| Reference Y2 | “There is a cat on the mat.” |
| Machine output | “the cat the cat on the mat.” |
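Before anything can be counted, each sentence has to be split into tokens and then into n-grams. A minimal sketch, assuming lowercasing and dropping punctuation (our tokenization choice, not something the example specifies); `tokenize` and `ngrams` are hypothetical helper names.

```python
import re


def tokenize(sentence: str) -> list[str]:
    # Assumption: lowercase and keep only runs of word characters,
    # so "The" matches "the" and the trailing "." is dropped.
    return re.findall(r"\w+", sentence.lower())


def ngrams(tokens: list[str], n: int) -> list[tuple[str, ...]]:
    # Slide a window of length n across the tokens (overlapping n-grams).
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


machine = "the cat the cat on the mat."
print(ngrams(tokenize(machine), 2))
```

For the machine output this yields six overlapping bigrams, with ("the", "cat") appearing twice.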

The bigrams in the machine output:

| Bigram | Count | Count_clip |
|---|---|---|
| the cat | 2 | 1 (Y1) |
| cat the | 1 | 0 |
| cat on | 1 | 1 (Y2) |
| on the | 1 | 1 (Y1, Y2) |
| the mat | 1 | 1 (Y1, Y2) |
| Totals | 6 | 4 |
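The table can be reproduced by counting each bigram in the machine output and clipping it by the largest count found in any single reference. A minimal sketch, assuming the same lowercase, punctuation-free tokenization; `ngram_counts` and `clipped_counts` are hypothetical names.

```python
import re
from collections import Counter


def ngram_counts(sentence: str, n: int) -> Counter:
    # Assumption: lowercase, word-character tokens; punctuation ignored.
    tokens = re.findall(r"\w+", sentence.lower())
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def clipped_counts(candidate: str, references: list[str], n: int) -> dict:
    """Map each candidate n-gram to (Count, Count_clip)."""
    cand = ngram_counts(candidate, n)
    ref_counts = [ngram_counts(r, n) for r in references]
    table = {}
    for gram, count in cand.items():
        # Most times this n-gram occurs in any single reference
        # (a Counter returns 0 for n-grams it has never seen).
        max_ref = max((rc[gram] for rc in ref_counts), default=0)
        # Clip: never credit more occurrences than the candidate has.
        table[gram] = (count, min(count, max_ref))
    return table


refs = ["The cat is on the mat.", "There is a cat on the mat."]
for gram, (count, clip) in clipped_counts("the cat the cat on the mat.", refs, 2).items():
    print(" ".join(gram), count, clip)
```

The printed rows match the table, and the two columns sum to 6 and 4.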

Count = how many times each bigram appears in the machine output.

Count_clip = the maximum number of times that bigram appears in any single reference (Y1 or Y2), capped at its Count.

Modified precision = sum(Count_clip) / sum(Count) = 4/6 = 2/3
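The same ratio, sum(Count_clip) / sum(Count), can be computed for any n-gram length. A minimal sketch, assuming the same lowercase tokenization as before; `modified_precision` is a hypothetical name. It uses `Counter` union (`|`), which keeps the larger count for each n-gram, to get the per-reference maximum in one pass.

```python
import re
from collections import Counter
from fractions import Fraction


def ngram_counts(sentence: str, n: int) -> Counter:
    # Assumption: lowercase, word-character tokens; punctuation ignored.
    tokens = re.findall(r"\w+", sentence.lower())
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def modified_precision(candidate: str, references: list[str], n: int) -> Fraction:
    cand = ngram_counts(candidate, n)
    total = sum(cand.values())          # sum(Count)
    if total == 0:
        return Fraction(0)
    max_ref = Counter()
    for ref in references:
        max_ref |= ngram_counts(ref, n)  # element-wise max over references
    clipped = sum(min(c, max_ref[g]) for g, c in cand.items())  # sum(Count_clip)
    return Fraction(clipped, total)


machine = "the cat the cat on the mat."
refs = ["The cat is on the mat.", "There is a cat on the mat."]
print(modified_precision(machine, refs, 2))
print(modified_precision(machine, refs, 1))
```

For bigrams this prints 2/3, matching the hand calculation. For unigrams it prints 5/7: "the" occurs 3 times in the output but at most 2 times in Y1, so it is clipped to 2.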

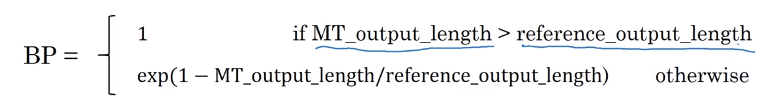

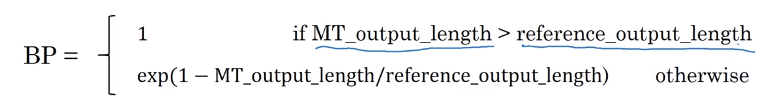

Here are the equations for modified precision in the general n-gram case: