GloVe is another algorithm for learning word embeddings, and it is the simplest of these algorithms.

It is not used as much as the word2vec models (skip-gram and negative sampling), but it has some enthusiasts because of its simplicity.

GloVe stands for Global Vectors for word representation.

Let’s use our previous example: “I want a glass of orange juice to go along with my cereal”.

We choose context and target words using one of the strategies described in the previous sections.

Then, for every pair, we count: $X_{ct}$ = number of times $t$ appears in the context of $c$.

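If the context is a window around the target (some words on each side), then $X_{ct} = X_{tc}$. If the context is defined differently, for example as only the words before the target, the two counts will generally not be equal. GloVe uses a symmetric window, so $X_{ct} = X_{tc}$.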
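A minimal sketch of counting $X_{ct}$ on the example sentence, assuming lowercased, whitespace-split tokens and a window of 2 words on each side (the window size and the helper name `cooccurrence_counts` are illustrative choices, not from the notes). Setting `symmetric=False` counts only the preceding words, which shows why the counts stop being symmetric in that case.

```python
from collections import defaultdict


def cooccurrence_counts(tokens, window=2, symmetric=True):
    """Return a dict X with X[(c, t)] = number of times t appears in the context of c.

    Hypothetical helper: with symmetric=True the context of c is `window` words on
    each side; with symmetric=False it is only the `window` words before c.
    """
    counts = defaultdict(int)
    for i, c in enumerate(tokens):
        lo = max(0, i - window)
        # Right edge of the context: after c for a symmetric window, c itself otherwise.
        hi = min(len(tokens), i + window + 1) if symmetric else i
        for j in range(lo, hi):
            if j != i:
                counts[(c, tokens[j])] += 1
    return dict(counts)


sentence = "I want a glass of orange juice to go along with my cereal".lower().split()

X = cooccurrence_counts(sentence, window=2)
print(X[("orange", "juice")], X[("juice", "orange")])  # 1 1

X_prev = cooccurrence_counts(sentence, window=2, symmetric=False)
print(X_prev.get(("orange", "juice"), 0), X_prev.get(("juice", "orange"), 0))  # 0 1
```

With the symmetric window every counted pair is seen from both sides, so `X[(c, t)] == X[(t, c)]`; with the "previous words only" context, "orange" precedes "juice", so only `("juice", "orange")` is counted.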

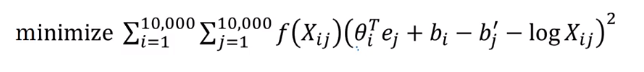

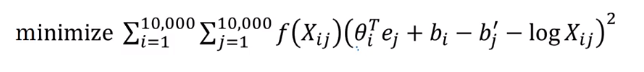

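The model is defined like this:

$$\text{minimize} \sum_{i}\sum_{j} f(X_{ij})\left(\theta_i^{\top} e_j + b_i + b'_j - \log X_{ij}\right)^2$$

where both sums run over all words in the vocabulary, and $\theta_i$ and $e_j$ play the roles of $\theta_t$ and $e_c$.

$f(X_{ij})$ is a weighting term: if $X_{ij} = 0$ then $f(X_{ij}) = 0$, so that term drops out of the sum (using the convention $0 \log 0 = 0$).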
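A minimal pure-Python sketch of this objective and of plain stochastic gradient descent on it. The toy counts, the embedding dimension, the learning rate, the placeholder weighting ($f(x) = 1$ for $x > 0$, else $0$), and the names `glove_loss` and `sgd_epoch` are all illustrative assumptions. Only nonzero counts are stored, since pairs with $X_{ij} = 0$ get zero weight anyway.

```python
import math
import random


def glove_loss(X, theta, e, b, b_prime, f):
    """Sum over stored pairs of f(X_ij) * (theta_i . e_j + b_i + b'_j - log X_ij)^2."""
    total = 0.0
    for (i, j), x_ij in X.items():  # only nonzero counts are stored, so log is defined
        dot = sum(t_k * e_k for t_k, e_k in zip(theta[i], e[j]))
        total += f(x_ij) * (dot + b[i] + b_prime[j] - math.log(x_ij)) ** 2
    return total


def sgd_epoch(X, theta, e, b, b_prime, f, lr=0.05):
    """One pass of stochastic gradient descent over the stored pairs (updates in place)."""
    for (i, j), x_ij in X.items():
        dot = sum(t_k * e_k for t_k, e_k in zip(theta[i], e[j]))
        # Derivative of f * diff^2 with respect to diff.
        g = 2 * f(x_ij) * (dot + b[i] + b_prime[j] - math.log(x_ij))
        for k in range(len(theta[i])):
            t_k, e_k = theta[i][k], e[j][k]
            theta[i][k] -= lr * g * e_k
            e[j][k] -= lr * g * t_k
        b[i] -= lr * g
        b_prime[j] -= lr * g


def f(x):
    # Placeholder weighting: every observed pair gets weight 1, unobserved pairs 0.
    return 1.0 if x > 0 else 0.0


# Hypothetical symmetric co-occurrence counts, made up for illustration.
X = {("orange", "juice"): 3, ("juice", "orange"): 3,
     ("apple", "juice"): 2, ("juice", "apple"): 2,
     ("glass", "orange"): 1, ("orange", "glass"): 1}

random.seed(0)
dim = 5
vocab = sorted({w for pair in X for w in pair})
theta = {w: [random.uniform(-0.5, 0.5) for _ in range(dim)] for w in vocab}
e = {w: [random.uniform(-0.5, 0.5) for _ in range(dim)] for w in vocab}
b = {w: 0.0 for w in vocab}
b_prime = {w: 0.0 for w in vocab}

loss_before = glove_loss(X, theta, e, b, b_prime, f)
for _ in range(200):
    sgd_epoch(X, theta, e, b, b_prime, f)
loss_after = glove_loss(X, theta, e, b, b_prime, f)
print(loss_before, loss_after)
```

The training loop is a plain SGD illustration; real implementations typically use a more refined optimizer and weighting, but the quantity being minimized has the same shape as the formula above.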

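The weighting term $f(X_{ij})$ is used for several reasons, including:

- It avoids the $\log 0$ problem, which would otherwise occur for target and context pairs that never co-occur ($X_{ij} = 0$).
- It balances word frequencies: very frequent words (such as "this", "is", "of", "a") do not get too much weight, while rare words still get a reasonable amount.

$\theta$ and $e$ play symmetric roles in the objective, which helps in getting the final word embedding: a common choice is to average the two, $e_w^{(\text{final})} = \frac{e_w + \theta_w}{2}$.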
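The notes do not give a formula for $f$. A common choice, taken from the original GloVe paper (Pennington et al., 2014), is $f(x) = (x / x_{\max})^{\alpha}$ for $x < x_{\max}$ and $1$ otherwise, with $x_{\max} = 100$ and $\alpha = 3/4$. The sketch below assumes that form, sets $f(0) = 0$ explicitly, and also shows the averaging of $e_w$ and $\theta_w$; the function names are hypothetical.

```python
def glove_weight(x, x_max=100.0, alpha=0.75):
    """Weighting f(x) in the form used by the original GloVe paper (assumed here)."""
    if x <= 0:
        return 0.0  # pairs that never co-occur get zero weight, avoiding log(0)
    if x < x_max:
        return (x / x_max) ** alpha  # less frequent pairs: down-weighted, but not to zero
    return 1.0  # very frequent pairs are capped so they don't dominate the loss


def final_embedding(e_w, theta_w):
    """Average the two symmetric vectors learned for word w."""
    return [(a + b) / 2 for a, b in zip(e_w, theta_w)]


print(glove_weight(0))  # 0.0
print(glove_weight(100))  # 1.0
print(glove_weight(10_000))  # 1.0
print(final_embedding([1.0, 2.0], [3.0, 0.0]))  # [2.0, 1.0]
```

The cap at $x_{\max}$ is what keeps words like "of" or "a" from dominating, while the power $\alpha < 1$ lifts the weight of rarer pairs relative to a purely linear weighting.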

Conclusions on word embeddings: