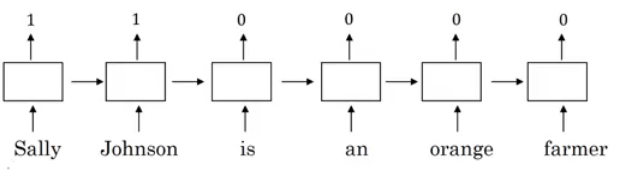

- An image example would be:

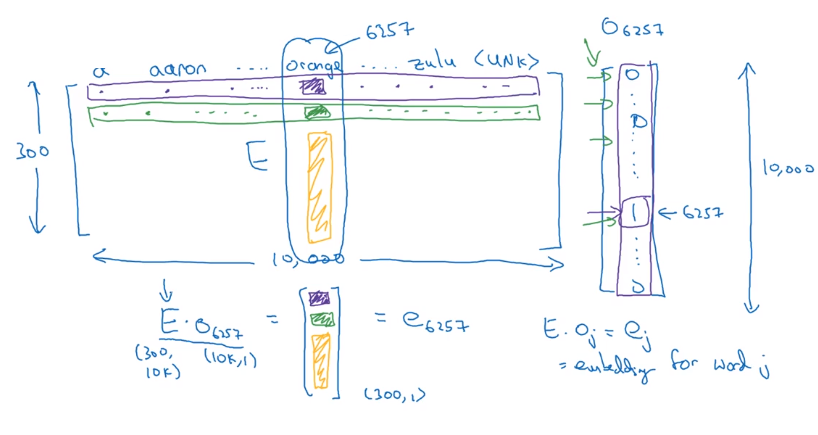

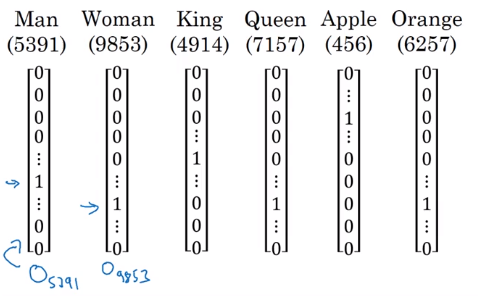

- We will use the notation O_idx for the one-hot vector of the word at index idx in the vocabulary, as in the image.

- One weakness of this representation is that it treats each word as a thing unto itself, so it doesn’t allow an algorithm to generalize across words.

- For example: “I want a glass of orange ______”, a model should predict the next word as juice.

- In a similar example, “I want a glass of apple ______”, a model won’t easily predict juice if it never saw that sentence during training. With one-hot vectors the two examples look unrelated, even though orange and apple are similar.

- The inner product between any two different one-hot vectors is zero. Also, the Euclidean distance between any two of them is the same (√2), so no pair of words looks closer than any other.
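
The zero inner product and the equal distances can be checked directly. The snippet below is a minimal sketch with NumPy; the vocabulary size of 10,000 and the word indices are hypothetical values chosen only for illustration.

```python
import numpy as np

vocab_size = 10_000  # assumed vocabulary size, for illustration only


def one_hot(idx, size=vocab_size):
    """Return a vector of zeros with a single 1 at position idx."""
    v = np.zeros(size)
    v[idx] = 1.0
    return v


# Hypothetical vocabulary indices for three words.
o_orange = one_hot(6257)
o_apple = one_hot(456)
o_king = one_hot(4914)

# Inner product between two different one-hot vectors.
dot_orange_apple = float(o_orange @ o_apple)

# Euclidean distances: the same for every pair of different words.
dist_orange_apple = float(np.linalg.norm(o_orange - o_apple))
dist_orange_king = float(np.linalg.norm(o_orange - o_king))

print(dot_orange_apple, dist_orange_apple, dist_orange_king)
```

Orange is exactly as far from apple as it is from king, so the representation carries no notion of similarity.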

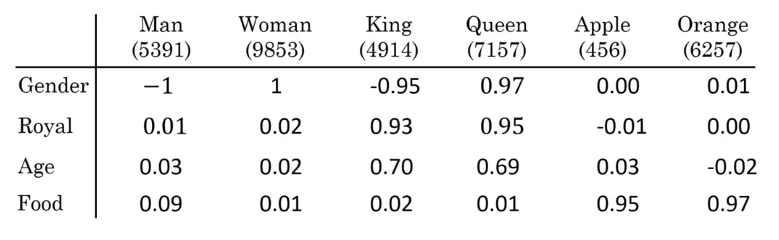

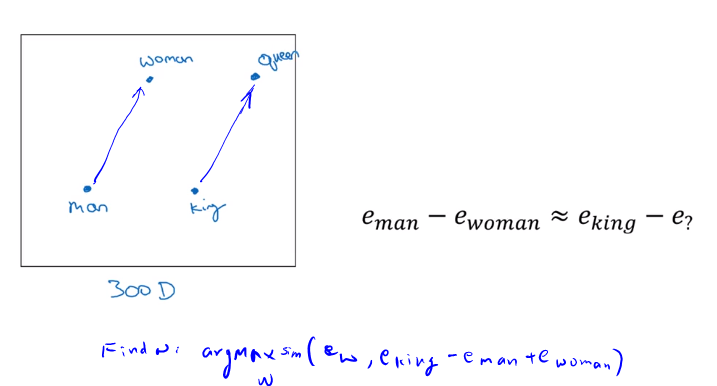

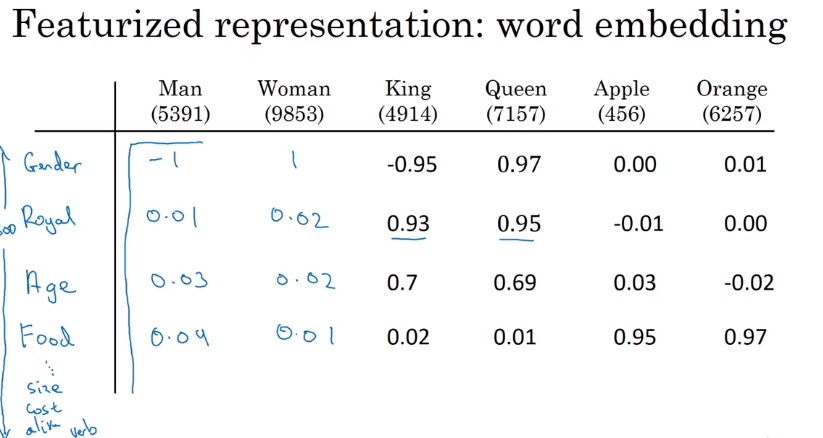

- Each word will have, for example, 300 features, each stored as a floating-point number.

- Each word’s column will then be a 300-dimensional vector, which serves as that word’s representation.

- We will use the notation e_5391 for the feature vector of the word man, whose vocabulary index is 5391.
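
One way to picture the notation is as a column lookup in a feature table. The sketch below assumes a table `E` of shape (300, 10,000) filled with random placeholder values; the name `E`, the vocabulary size, and the random contents are assumptions for illustration, not learned features.

```python
import numpy as np

n_features = 300     # features per word, as in the notes
vocab_size = 10_000  # assumed vocabulary size

# Placeholder feature table: one column per word (random, not learned).
rng = np.random.default_rng(0)
E = rng.normal(size=(n_features, vocab_size)).astype(np.float32)

man_idx = 5391  # index of "man" in the notes' example

# e_5391: the 300-dimensional column that represents "man".
e_man = E[:, man_idx]

# The same vector can be obtained by multiplying the table
# by the one-hot vector of the word.
o_man = np.zeros(vocab_size, dtype=np.float32)
o_man[man_idx] = 1.0
e_man_via_matmul = E @ o_man

print(e_man.shape)
```

In practice the direct column lookup is preferred, since multiplying by a vector that is almost entirely zeros wastes computation.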

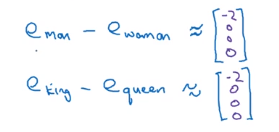

- Now, if we return to the examples we described again:

- “I want a glass of orange ______”

- “I want a glass of apple ______”

- Orange and apple now share many similar features, which makes it easier for an algorithm to generalize between them.

- We call this representation word embeddings.

- In this representation, more closely related words end up closer to each other.
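
To make "closer" concrete, the sketch below compares a few hand-made feature vectors using cosine similarity, which is one common closeness measure (the notes do not prescribe one). The four features and all numeric values are invented for illustration and are not learned embeddings.

```python
import numpy as np

# Toy 4-feature vectors (hypothetical features: gender, royal, age, food).
embeddings = {
    "orange": np.array([0.00, 0.01, 0.03, 0.95]),
    "apple": np.array([0.01, -0.01, 0.02, 0.97]),
    "king": np.array([-0.95, 0.93, 0.70, 0.02]),
}


def cosine_similarity(u, v):
    """Cosine of the angle between u and v (1 means same direction)."""
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))


sim_orange_apple = cosine_similarity(embeddings["orange"], embeddings["apple"])
sim_orange_king = cosine_similarity(embeddings["orange"], embeddings["king"])

print(f"orange vs apple: {sim_orange_apple:.3f}")
print(f"orange vs king:  {sim_orange_king:.3f}")
```

With these toy values, orange and apple score close to 1 while orange and king score close to 0, unlike the one-hot case where every pair of words is equally unrelated.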