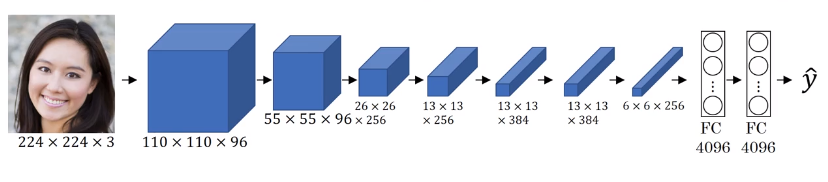

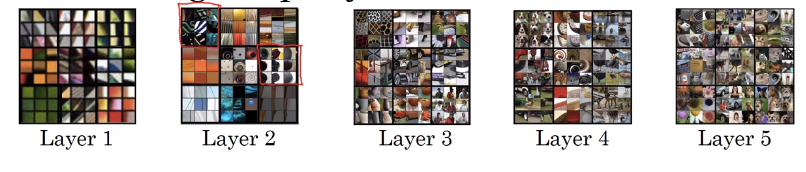

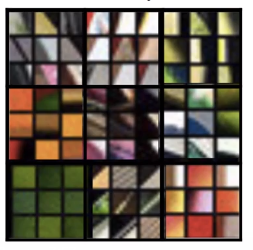

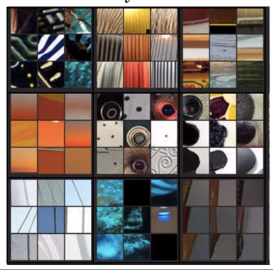

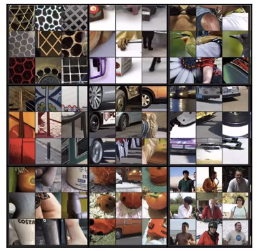

As Zeiler & Fergus (2013) demonstrated, the earlier layers of a ConvNet tend to detect lower-level features such as edges and simple textures, while the later (deeper) layers tend to detect higher-level features such as more complex textures and object classes.

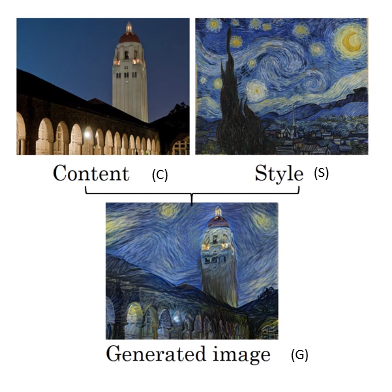

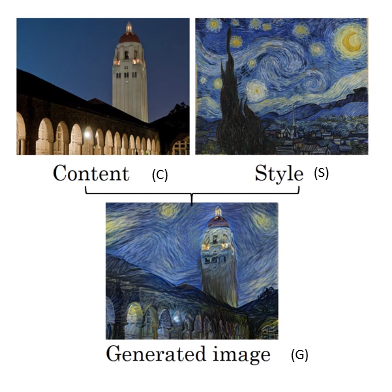

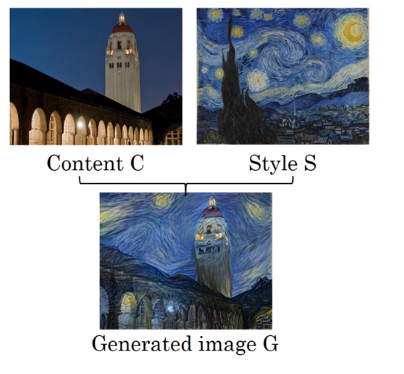

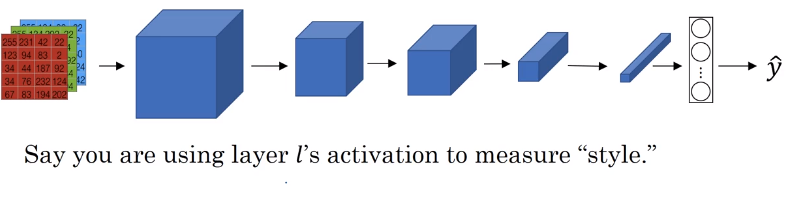

The “generated” image G should have content similar to that of the input image C. Suppose the activations of some layer are chosen to represent the content of an image. In practice, the most visually pleasing results come from a layer in the middle of the network, neither too shallow nor too deep.

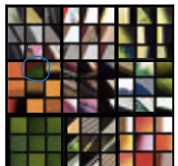

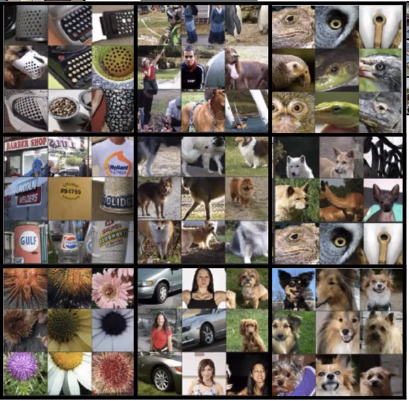

Pick a unit in layer 1. Find the nine image patches that maximize the unit’s activation.

In other words, pass your training set through your neural network and figure out which images maximize that particular unit's activation. Notice that a hidden unit in layer 1 sees only a relatively small portion of the input image. So when you visualize what activates the unit, it makes sense to plot just small image patches, because that is all of the image that this particular unit sees. If you pick one hidden unit and find the nine input image patches that maximize its activation, you might find nine image patches like this.

So it looks like, in the lower region of the image that this particular hidden unit sees, it is looking for an edge or a line like the one shown. Those are the nine image patches that maximally activate this one hidden unit.

Repeat for other units.
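The procedure above can be sketched in NumPy as follows. This is a minimal sketch assuming the layer-1 activations for every candidate patch have already been computed and stored in a `(num_patches, num_units)` array; the function name `top_k_patches` and the random toy data are hypothetical stand-ins for running a real training set through the network.

```python
import numpy as np

def top_k_patches(activations, unit, k=9):
    """Return the indices of the k patches that most strongly activate `unit`.

    activations: hypothetical precomputed array of shape (num_patches, num_units),
    where row p holds the layer-1 activations produced by input patch p.
    """
    scores = activations[:, unit]
    # argsort sorts ascending, so reverse it and keep the first k indices
    return np.argsort(scores)[::-1][:k]

# Toy data standing in for real layer-1 activations: 100 patches, 4 units.
rng = np.random.default_rng(0)
acts = rng.random((100, 4))

# "Repeat for other units": one top-9 list of patch indices per hidden unit.
top9_per_unit = {u: top_k_patches(acts, u) for u in range(acts.shape[1])}
```

In a real visualization, each index would then be mapped back to the image patch (the unit's receptive field) it came from, and the nine patches plotted side by side.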

$J_{content}(C,G)$ measures how similar the content of C and G is. $J_{style}(S,G)$ measures how similar the style of S and G is.
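For reference, Gatys et al. (2015) combine the two terms into a single weighted objective, where $\alpha$ and $\beta$ are hyperparameters that trade off content against style (their values are a tuning choice, not fixed by the method):

$$J(G) = \alpha \, J_{content}(C,G) + \beta \, J_{style}(S,G)$$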

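After defining the cost, initialize the generated image G (for example, randomly) and use gradient descent to update the pixels of G so as to minimize the cost.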

Suppose one particular hidden layer $l$ is selected. Set the image C as the input to the pretrained VGG network and run forward propagation. Let $a^{(C)}$ be the hidden layer activations in the chosen layer. (The superscript $[l]$ is dropped from $a^{[l](C)}$ to simplify the notation; usually $l$ is a middle layer of the ConvNet.) This will be an $n_H \times n_W \times n_C$ tensor. Repeat this process with the image G: set G as the input and run forward propagation. Let $a^{(G)}$ be the corresponding hidden layer activation. We define the content cost function as:

$$J_{content}(C,G) = \frac{1}{4 \times n_H \times n_W \times n_C} \sum_{\text{all entries}} \left(a^{(C)} - a^{(G)}\right)^2$$

The normalization constant in front of the sum is a convention and can be chosen differently.

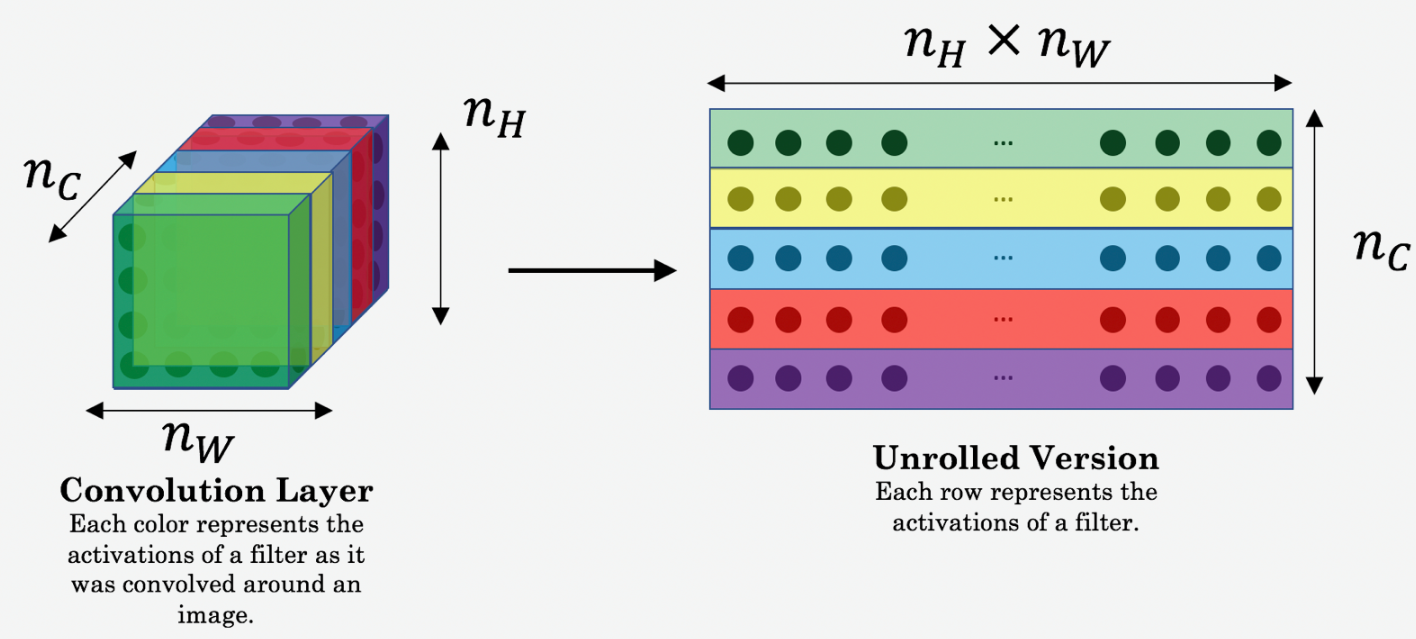

Here, $n_H$, $n_W$ and $n_C$ are the height, width and number of channels of the hidden layer you have chosen, and they appear in a normalization term in the cost. For clarity, note that $a^{(C)}$ and $a^{(G)}$ are the volumes corresponding to a hidden layer's activations. In order to compute the cost $J_{content}(C,G)$, it might also be convenient to unroll these 3D volumes into a 2D matrix, as shown below. (Technically this unrolling step isn't needed to compute $J_{content}$, but it will be good practice for when you do need to carry out a similar operation later for computing the style cost $J_{style}$.)
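A minimal NumPy sketch of the content cost, assuming one layer's activations are stored as `(n_H, n_W, n_C)` arrays and assuming the normalization $J_{content}(C,G) = \frac{1}{4 n_H n_W n_C}\sum (a^{(C)} - a^{(G)})^2$ over all entries. The function name `content_cost` is our own; in practice `a_C` and `a_G` would come from a forward pass of a pretrained network.

```python
import numpy as np

def content_cost(a_C, a_G):
    """J_content(C, G) for one layer, using the 1 / (4 * n_H * n_W * n_C) normalization.

    a_C, a_G: activations of shape (n_H, n_W, n_C) from the same hidden layer,
    computed with C and G as input respectively.
    """
    n_H, n_W, n_C = a_C.shape
    # Unroll each 3D volume into a 2D matrix of shape (n_H * n_W, n_C).
    # (Not strictly needed here: the sum runs over every entry either way.)
    a_C_unrolled = a_C.reshape(n_H * n_W, n_C)
    a_G_unrolled = a_G.reshape(n_H * n_W, n_C)
    return np.sum((a_C_unrolled - a_G_unrolled) ** 2) / (4 * n_H * n_W * n_C)

a_C = np.zeros((2, 3, 4))       # toy activations for C
a_G = np.ones((2, 3, 4))        # toy activations for G
print(content_cost(a_C, a_G))   # 24 squared differences of 1, divided by 4 * 24 -> 0.25
```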

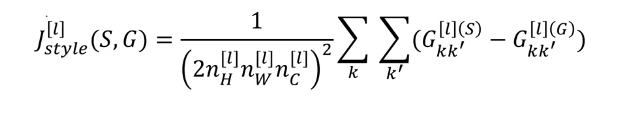

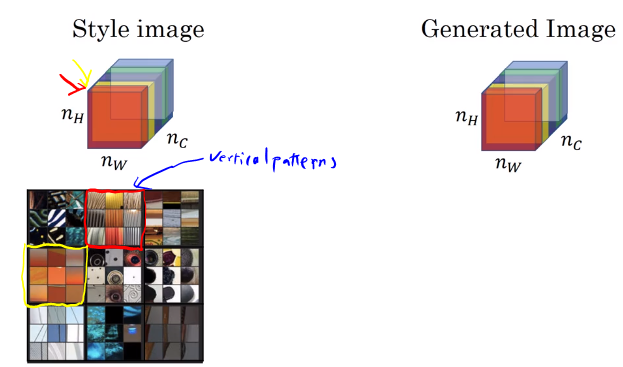

After generating the Style matrix (Gram matrix), our goal will be to minimize the distance between the Gram matrix of the “style” image S and that of the “generated” image G. For now, we are using only a single hidden layer $a^{[l]}$, and the corresponding style cost for this layer is defined as:

$$J_{style}^{[l]}(S,G) = \frac{1}{4 \times n_C^2 \times (n_H \times n_W)^2} \sum_{i=1}^{n_C} \sum_{j=1}^{n_C} \left(G^{(S)}_{ij} - G^{(G)}_{ij}\right)^2$$

where $G^{(S)}$ and $G^{(G)}$ are respectively the Gram matrices of the “style” image and the “generated” image, computed using the hidden layer activations for a particular hidden layer in the network.
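A minimal NumPy sketch of the single-layer style cost, assuming activations of shape `(n_H, n_W, n_C)` and the normalization $\frac{1}{4 n_C^2 (n_H n_W)^2}$ applied to the sum of squared differences between the two Gram matrices. The helper names `gram_matrix` and `layer_style_cost` are our own, not from a particular library.

```python
import numpy as np

def gram_matrix(a):
    """Gram (style) matrix of one layer's activations, shape (n_C, n_C)."""
    n_H, n_W, n_C = a.shape
    # Unroll to (n_C, n_H * n_W): one row per channel (filter).
    A = a.reshape(n_H * n_W, n_C).T
    return A @ A.T

def layer_style_cost(a_S, a_G):
    """J_style^[l](S, G) for a single layer, assuming the
    1 / (4 * n_C**2 * (n_H * n_W)**2) normalization."""
    n_H, n_W, n_C = a_S.shape
    G_S = gram_matrix(a_S)   # Gram matrix of the style image
    G_G = gram_matrix(a_G)   # Gram matrix of the generated image
    return np.sum((G_S - G_G) ** 2) / (4 * n_C**2 * (n_H * n_W) ** 2)
```

For example, with `a_S = np.ones((2, 2, 3))` and an all-zero `a_G`, every entry of $G^{(S)}$ is 4, so the cost is $9 \cdot 16 / (4 \cdot 9 \cdot 16) = 0.25$.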

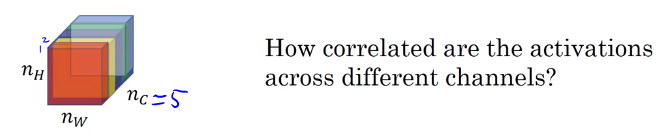

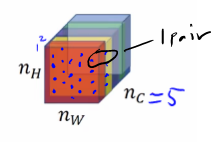

Intuitively, if we use layer $l$'s activation to measure style, we define style as the correlation between activations across channels.

For example, the figure above has 5 channels. First, take the first two channels and see how correlated their activations are (their unnormalized cross-covariance): at every position, the two channels give you a pair of numbers, and you measure how those pairs vary together across positions.

The unnormalized cross-covariance tells you which of these high-level texture components tend to occur, or not occur, together in parts of an image. The degree of correlation gives you one way of measuring how often these different high-level features (such as a vertical texture, an orange tint, or other things) occur, and how often they do or don't occur together in different parts of an image.

If we use the degree of correlation between channels as a measure of style, then you can measure the degree to which, in your generated image, the first channel is correlated or uncorrelated with the second channel. That tells you how often, in the generated image, this type of vertical texture occurs or doesn't occur together with this orange-ish tint, and comparing it with the same quantity for the style image gives a measure of how similar the style of the generated image is to the style of the input style image.

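Let $a^{[l]}_{i,j,k}$ be the activation at position $(i, j, k)$ of layer $l$. The style matrix $G^{[l]}$ is $n_C^{[l]} \times n_C^{[l]}$.

For the style image:

$$G^{[l](S)}_{kk'} = \sum_{i=1}^{n_H^{[l]}} \sum_{j=1}^{n_W^{[l]}} a^{[l](S)}_{i,j,k} \, a^{[l](S)}_{i,j,k'}, \quad \text{where } k, k' = 1, \dots, n_C^{[l]}$$

For the generated image:

$$G^{[l](G)}_{kk'} = \sum_{i=1}^{n_H^{[l]}} \sum_{j=1}^{n_W^{[l]}} a^{[l](G)}_{i,j,k} \, a^{[l](G)}_{i,j,k'}, \quad \text{where } k, k' = 1, \dots, n_C^{[l]}$$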
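Written out with explicit loops, the double sum above is easy to check by hand. This NumPy sketch assumes the chosen layer's activations are stored as an `(n_H, n_W, n_C)` array; the name `style_matrix_loops` is our own, and the same function would be applied once to the style image's activations and once to the generated image's.

```python
import numpy as np

def style_matrix_loops(a):
    """G[k, k2] = sum over positions (i, j) of a[i, j, k] * a[i, j, k2]."""
    n_H, n_W, n_C = a.shape
    G = np.zeros((n_C, n_C))
    for k in range(n_C):
        for k2 in range(n_C):
            # Unnormalized cross-covariance of channels k and k2:
            # multiply the pair of activations at every position and add them up.
            total = 0.0
            for i in range(n_H):
                for j in range(n_W):
                    total += a[i, j, k] * a[i, j, k2]
            G[k, k2] = total
    return G

# One row, two positions, two channels: positions hold [1, 2] and [3, 4].
a = np.array([[[1.0, 2.0], [3.0, 4.0]]])   # shape (1, 2, 2)
G = style_matrix_loops(a)                   # [[10, 14], [14, 20]]
```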

The style matrix is also called a “Gram matrix.” In linear algebra, the Gram matrix $G$ of a set of vectors $(v_1, \dots, v_n)$ is the matrix of dot products, whose entries are $G_{ij} = v_i^\top v_j$. In other words, $G_{ij}$ compares how similar $v_i$ is to $v_j$: if they are highly similar, you would expect them to have a large dot product, and thus for $G_{ij}$ to be large.
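A small NumPy illustration of the linear-algebra definition, using three made-up vectors stored as the rows of a matrix; the helper name `gram` is our own.

```python
import numpy as np

def gram(vectors):
    """Gram matrix of vectors v_1..v_n (the rows of `vectors`): G[i, j] = v_i . v_j."""
    V = np.asarray(vectors, dtype=float)
    return V @ V.T

v = [[1.0, 2.0, 0.0],   # v_1
     [1.0, 2.1, 0.0],   # v_2, nearly parallel to v_1
     [0.0, 0.0, 1.0]]   # v_3, orthogonal to v_1 and v_2
G = gram(v)
# G[0, 1] = 1*1 + 2*2.1 + 0*0 = 5.2 (similar vectors, large dot product)
# G[0, 2] = 0 (orthogonal vectors, no similarity)
```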

Note that there is an unfortunate collision in the variable names used here. We are following common terminology used in the literature, but $G$ is used to denote the Style matrix (or Gram matrix) as well as to denote the generated image $G$.

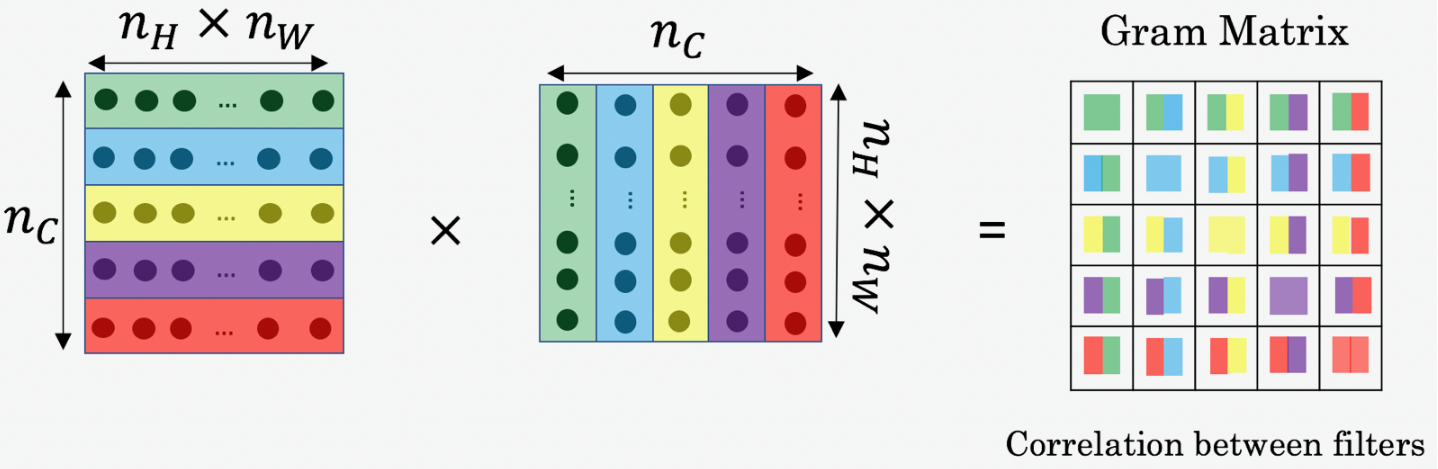

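In NST, the Style matrix can be computed by multiplying the “unrolled” filter matrix $A_{unrolled}$, which has one row of length $n_H \times n_W$ per filter, by its transpose:

$$G_{gram} = A_{unrolled} \, A_{unrolled}^\top$$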

The result is a matrix of dimension $(n_C, n_C)$, where $n_C$ is the number of filters. The value $G_{ij}$ measures how similar the activations of filter $i$ are to the activations of filter $j$.
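A minimal NumPy sketch of the matrix form, assuming activations of shape `(n_H, n_W, n_C)` with random toy values; the function name `gram_from_activations` is our own.

```python
import numpy as np

def gram_from_activations(a):
    """Style matrix G = A_unrolled @ A_unrolled.T for activations of shape (n_H, n_W, n_C)."""
    n_H, n_W, n_C = a.shape
    # Unroll: each of the n_C filters becomes one row of length n_H * n_W.
    A_unrolled = a.reshape(n_H * n_W, n_C).T   # shape (n_C, n_H * n_W)
    return A_unrolled @ A_unrolled.T            # shape (n_C, n_C)

rng = np.random.default_rng(1)
a = rng.random((4, 5, 3))    # toy activations: n_H=4, n_W=5, n_C=3
G = gram_from_activations(a)
```

The reshape-then-transpose order matters: calling `a.reshape(n_C, n_H * n_W)` directly on a channels-last array would mix positions and channels within each row, so its rows would no longer correspond to individual filters.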

One important property of the Gram matrix is that the diagonal elements, such as $G_{ii}$, also measure how active filter $i$ is. For example, suppose filter $i$ detects vertical textures in the image. Then $G_{ii}$ measures how common vertical textures are in the image as a whole: if $G_{ii}$ is large, the image has a lot of vertical texture.

By capturing the prevalence of different types of features ($G_{ii}$), as well as how much different features occur together ($G_{ij}$), the Style matrix measures the style of an image. The Gram matrix of $A$ is $G_A = AA^\top$.
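To make the diagonal versus off-diagonal reading concrete, here is a toy NumPy example with hand-built (hypothetical) activations for three channels; the "vertical texture" label is only an illustrative interpretation of channel 0.

```python
import numpy as np

# Hypothetical toy activations, shape (n_H, n_W, n_C) = (4, 4, 3):
#   channel 0: a "vertical texture" detector active at every position
#   channel 1: fires only in the left half of the image
#   channel 2: fires only in the right half, never together with channel 1
a = np.zeros((4, 4, 3))
a[:, :, 0] = 1.0
a[:, :2, 1] = 1.0
a[:, 2:, 2] = 1.0

A = a.reshape(-1, 3).T      # unrolled, shape (n_C, n_H * n_W)
G = A @ A.T

prevalence = np.diag(G)     # [16, 8, 8]: channel 0 is the most common feature
cooccur_01 = G[0, 1]        # 8: features 0 and 1 occur together at 8 positions
cooccur_12 = G[1, 2]        # 0: features 1 and 2 never occur together
```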

Matthew D. Zeiler, Rob Fergus. (2013). Visualizing and Understanding Convolutional Networks. arXiv:1311.2901

Leon A. Gatys, Alexander S. Ecker, Matthias Bethge. (2015). A Neural Algorithm of Artistic Style. arXiv:1508.06576