In transfer learning, you have a sequential process where you learn from task A and then transfer that knowledge to task B. In multi-task learning, you start off simultaneously, trying to have one neural network do several things at the same time, and hopefully each of these tasks helps all of the other tasks.

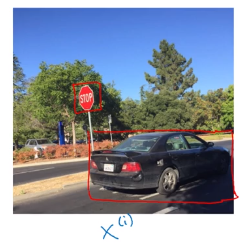

A self-driving car, for example, needs to detect many kinds of objects in the same image.

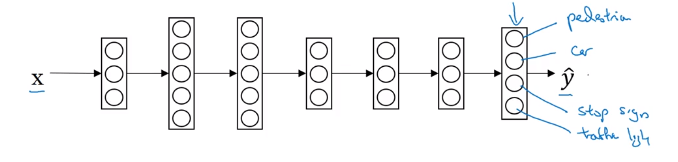

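Let's say we want to detect 4 kinds of objects. Then each label $y^{(i)}$ is a $(4, 1)$ vector, and

$$Y = \left[\, y^{(1)} \; y^{(2)} \; \cdots \; y^{(m)} \,\right]$$

is a $(4, m)$ matrix.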
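A minimal NumPy sketch of how the per-image label vectors stack into $Y$. The three images and their 0/1 labels are hypothetical, chosen only to show the shape convention (one row per task, one column per example).

```python
import numpy as np

# Hypothetical 0/1 labels for m = 3 images; each y^(i) is a (4, 1) column
# with one entry per object type the network should detect.
y1 = np.array([[0], [1], [1], [0]])
y2 = np.array([[1], [0], [0], [1]])
y3 = np.array([[0], [1], [0], [0]])

# Stack the columns side by side: Y has one row per task, one column per image.
Y = np.hstack([y1, y2, y3])
print(Y.shape)  # (4, 3)
```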

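To train the network, define a loss function. The cost averaged over the entire training set is 1 over $m$ times the sum from $i = 1$ through $m$ and from $j = 1$ through $4$ of the losses of the individual predictions:

$$J = \frac{1}{m}\sum_{i=1}^{m}\sum_{j=1}^{4}\mathcal{L}\left(\hat{y}_j^{(i)}, y_j^{(i)}\right)$$

where $\mathcal{L}$ is the usual **logistic loss**:

$$\mathcal{L}\left(\hat{y}_j^{(i)}, y_j^{(i)}\right) = -y_j^{(i)}\log \hat{y}_j^{(i)} - \left(1 - y_j^{(i)}\right)\log\left(1 - \hat{y}_j^{(i)}\right)$$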
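The cost $J$ can be sketched in NumPy as below, assuming predictions and labels are both stored as $(4, m)$ arrays. The `eps` clipping is an added numerical safeguard against $\log(0)$, not part of the formula itself, and the function names are hypothetical.

```python
import numpy as np

def logistic_loss(y_hat, y, eps=1e-12):
    """Element-wise logistic loss; y_hat is clipped to avoid log(0)."""
    y_hat = np.clip(y_hat, eps, 1 - eps)
    return -(y * np.log(y_hat) + (1 - y) * np.log(1 - y_hat))

def multitask_cost(Y_hat, Y):
    """J = (1/m) * sum over i and j of L(y_hat_j^(i), y_j^(i)).

    Y_hat and Y have shape (n_tasks, m): rows are tasks, columns are examples.
    """
    m = Y.shape[1]
    return np.sum(logistic_loss(Y_hat, Y)) / m

# Usage: predicting 0.5 everywhere costs log(2) per entry, i.e. 4 * log(2) per image.
Y = np.array([[1, 0], [0, 1], [1, 1], [0, 0]])
Y_hat = np.full((4, 2), 0.5)
print(multitask_cost(Y_hat, Y))  # ≈ 2.7726
```

Summing over both $i$ and $j$ before dividing by $m$ is what makes this a single cost for all four tasks, rather than four separate training problems.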

Unlike softmax regression, which assigns exactly one label to each image, here one image can have multiple labels (for each image: does it have a car? does it have a stop sign?).

If you train a neural network to minimize this cost function, you are carrying out multi-task learning, because you are building a single neural network that looks at each image and solves four problems at once.
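To make the contrast with softmax concrete, here is a hypothetical forward pass for one shared network with four output units. The layer sizes, random weights, and the single ReLU hidden layer are all assumptions for illustration. Applying a sigmoid to each output unit gives four independent probabilities, so several can be high at once, while a softmax over the same outputs forces each column to sum to 1 (one class per image).

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def softmax(z):
    # Subtract the column max for numerical stability.
    e = np.exp(z - z.max(axis=0, keepdims=True))
    return e / e.sum(axis=0, keepdims=True)

rng = np.random.default_rng(0)
n_x, n_h, n_tasks, m = 12, 8, 4, 5  # hypothetical sizes
X = rng.standard_normal((n_x, m))   # one column per image

# One hidden layer shared by all four tasks.
W1, b1 = rng.standard_normal((n_h, n_x)) * 0.1, np.zeros((n_h, 1))
# Output layer with one unit per task.
W2, b2 = rng.standard_normal((n_tasks, n_h)) * 0.1, np.zeros((n_tasks, 1))

A1 = np.maximum(0, W1 @ X + b1)  # ReLU activations, shape (n_h, m)
Z2 = W2 @ A1 + b2                # shape (n_tasks, m)

Y_hat = sigmoid(Z2)  # multi-task: each entry is its own probability in (0, 1)
P = softmax(Z2)      # softmax: each column is one distribution over the 4 classes

print(Y_hat.shape)    # (4, 5)
print(P.sum(axis=0))  # every column sums to 1 (up to rounding)
```

Only the sigmoid version matches the per-entry logistic loss used in $J$.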

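Multi-task learning still works even if the labels are not fully available, i.e. some entries of $Y$ are unknown (marked "?").

In this case, the inner sum over $j$ runs only over the entries whose label is 0 or 1, skipping the "?" entries. So one input's $y^{(i)}$ could be fully labeled with 0s and 1s, while another's could look like $[?, 1, ?, ?]^T$ if only one of its four objects is labeled.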
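One way to implement "sum only over labeled entries" is a mask, sketched below. It assumes unlabeled entries are stored as `np.nan` in `Y`; that encoding and the function name are hypothetical choices, not something the notes specify. The result is still divided by $m$, matching the $\frac{1}{m}$ in $J$.

```python
import numpy as np

def masked_multitask_cost(Y_hat, Y, eps=1e-12):
    """Cost that sums the logistic loss only over entries labeled 0 or 1.

    Assumption: unlabeled ("?") entries of Y are stored as np.nan.
    """
    mask = ~np.isnan(Y)                    # True where a 0/1 label exists
    Y_filled = np.where(mask, Y, 0.0)      # dummy value; these entries are masked out
    Y_hat = np.clip(Y_hat, eps, 1 - eps)   # avoid log(0)
    losses = -(Y_filled * np.log(Y_hat) + (1 - Y_filled) * np.log(1 - Y_hat))
    m = Y.shape[1]
    return np.sum(np.where(mask, losses, 0.0)) / m

# Usage: image 1 is fully labeled, image 2 has only its second label.
Y = np.array([[1, np.nan],
              [0, 1],
              [1, np.nan],
              [0, np.nan]])
Y_hat = np.full((4, 2), 0.5)
print(masked_multitask_cost(Y_hat, Y))  # 5 labeled entries * log(2) / 2 ≈ 1.7329
```

Because masked entries contribute zero loss, the network's predictions for unlabeled objects receive no gradient from those images.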