It’s not always easy to combine all the things you care about into a single-number evaluation metric. In those cases, it can be useful to set up both satisficing and optimizing metrics.

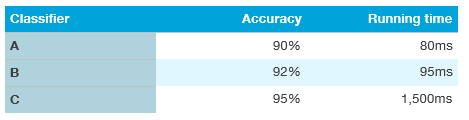

Suppose you care about both the classification accuracy and the running time of a learning algorithm. You need to choose from these three classifiers:

One option is to derive a single metric by putting accuracy and running time into one formula, such as the weighted score $\text{Accuracy} - 0.5 \times \text{RunningTime}$. But combining two such different quantities with a linear weighted sum like this feels artificial: the weight 0.5 is arbitrary, and the resulting ranking even depends on the units in which running time is measured.
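To make that artificiality concrete, here is a minimal Python sketch of the weighted-sum approach. The candidate names and their accuracy and running-time numbers are hypothetical, made up for illustration; only the weight 0.5 comes from the formula above. Because the formula subtracts a running time from an accuracy percentage, switching the running-time unit from milliseconds to seconds is enough to change which classifier wins.

```python
# Hypothetical candidates: (accuracy in percent, running time in milliseconds).
CANDIDATES = {
    "clf_fast": (88.0, 40.0),
    "clf_mid": (91.0, 90.0),
    "clf_slow": (96.0, 1200.0),
}


def weighted_score(accuracy: float, running_time: float, weight: float = 0.5) -> float:
    """Single-number metric: Accuracy - weight * RunningTime (higher is better)."""
    return accuracy - weight * running_time


def best_by_weighted_score(time_scale: float = 1.0, weight: float = 0.5) -> str:
    """Return the highest-scoring candidate; `time_scale` converts the stored
    milliseconds into whatever unit the running time is expressed in."""
    return max(
        CANDIDATES,
        key=lambda name: weighted_score(
            CANDIDATES[name][0], CANDIDATES[name][1] * time_scale, weight
        ),
    )


best_ms = best_by_weighted_score(time_scale=1.0)     # running time in milliseconds
best_s = best_by_weighted_score(time_scale=1 / 1000)  # running time in seconds
print(best_ms, best_s)  # clf_fast clf_slow
```

The same weight picks the fastest classifier when time is in milliseconds and the slowest one when time is in seconds, which is one reason a hand-tuned linear combination is hard to justify.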

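Here’s what you can do instead: first define what counts as an acceptable running time, then maximize accuracy subject to the classifier meeting that running-time requirement. Running time is then a satisficing metric (the classifier only has to be “good enough” on it), and accuracy is the optimizing metric.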

More generally, if you have N metrics you care about, it is sometimes reasonable to pick one of them as the optimizing metric and treat the remaining N−1 as satisficing metrics.
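The general recipe can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a prescribed implementation: `select_model`, the metric names, the candidate numbers, and the thresholds (100 ms, 50 MB) are all hypothetical. Each satisficing metric is expressed as a pass/fail check, and only candidates that pass every check compete on the optimizing metric.

```python
from typing import Callable, Mapping, Optional

# Hypothetical candidates and metric values, for illustration only.
CANDIDATES = {
    "clf_a": {"accuracy": 0.90, "running_time_ms": 80.0, "memory_mb": 30.0},
    "clf_b": {"accuracy": 0.92, "running_time_ms": 95.0, "memory_mb": 60.0},
    "clf_c": {"accuracy": 0.95, "running_time_ms": 1500.0, "memory_mb": 40.0},
}


def select_model(
    candidates: Mapping[str, Mapping[str, float]],
    optimizing_metric: str,
    satisficing: Mapping[str, Callable[[float], bool]],
) -> Optional[str]:
    """Maximize `optimizing_metric` over the candidates that pass every
    satisficing check; return None if no candidate is acceptable."""
    feasible = [
        name
        for name, metrics in candidates.items()
        if all(check(metrics[metric]) for metric, check in satisficing.items())
    ]
    if not feasible:
        return None
    return max(feasible, key=lambda name: candidates[name][optimizing_metric])


# N = 3 metrics: 1 optimizing (accuracy) and N - 1 = 2 satisficing.
best = select_model(
    CANDIDATES,
    optimizing_metric="accuracy",
    satisficing={
        "running_time_ms": lambda t: t <= 100.0,  # hypothetical threshold
        "memory_mb": lambda m: m <= 50.0,  # hypothetical threshold
    },
)
print(best)  # clf_a
```

Expressing each satisficing metric as a predicate lets a constraint be an upper bound (running time, memory) or a lower bound without changing the selection code. Note that `clf_c` has the best accuracy but is never considered, because it fails the running-time check.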

As a final example, suppose you are building a hardware device that uses a microphone to listen for the user saying a particular “wakeword” (or “trigger word”), which then causes the system to wake up. Examples include Amazon Echo listening for “Alexa”; Apple Siri listening for “Hey Siri”; Android listening for “Okay Google”; and Baidu apps listening for “你好百度” (“Hello Baidu”). You care about both the false positive rate (how often the system wakes up even when no one said the wakeword) and the accuracy rate (how often it wakes up when someone does say the wakeword). One reasonable goal for the performance of this system is to maximize the accuracy rate (the optimizing metric), subject to there being no more than one false positive every 24 hours of operation (the satisficing metric).

Once your team is aligned on the evaluation metric to optimize, they will be able to make faster progress.
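The wakeword goal can be sketched the same way. In this hypothetical Python example, the model names, accuracies, false-positive counts, and the 72 hours of wakeword-free test audio are invented for illustration; only the threshold of one false positive per 24 hours comes from the text. Observed false-positive counts are first normalized to a per-24-hour rate, and only then is the satisficing check applied.

```python
# Hypothetical wakeword-detector candidates. "accuracy" is the fraction of
# wakeword utterances that woke the device; false positives were counted
# over `hours_without_wakeword` hours of audio containing no wakeword.
CANDIDATES = {
    "model_1": {"accuracy": 0.97, "false_positives": 10, "hours_without_wakeword": 72.0},
    "model_2": {"accuracy": 0.94, "false_positives": 2, "hours_without_wakeword": 72.0},
    "model_3": {"accuracy": 0.91, "false_positives": 0, "hours_without_wakeword": 72.0},
}

MAX_FALSE_POSITIVES_PER_24H = 1.0  # satisficing threshold from the text


def false_positives_per_24h(false_positives: int, hours: float) -> float:
    """Normalize an observed false-positive count to a rate per 24 hours."""
    return false_positives * 24.0 / hours


def pick_wakeword_model(candidates):
    """Maximize accuracy (optimizing) among models whose false-positive
    rate is at most one per 24 hours (satisficing)."""
    feasible = [
        name
        for name, m in candidates.items()
        if false_positives_per_24h(m["false_positives"], m["hours_without_wakeword"])
        <= MAX_FALSE_POSITIVES_PER_24H
    ]
    if not feasible:
        return None
    return max(feasible, key=lambda name: candidates[name]["accuracy"])


print(pick_wakeword_model(CANDIDATES))  # model_2
```

Here `model_1` is the most accurate but wakes up about 3.3 times per 24 hours, so it is ruled out. A rate estimated from only a handful of events over a few days of audio is noisy, so a real evaluation would likely need considerably more wakeword-free audio before trusting the check.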