$\mu$ and $\sigma^2$ are computed over an entire mini-batch during training, but at test time you won’t necessarily have a mini-batch of the same size; you may be processing a single example. So to apply your neural network at test time, you need a separate estimate of $\mu$ and $\sigma^2$.

At test time, $\mu$ and $\sigma^2$ are estimated using an exponentially weighted average across the mini-batches seen during training.

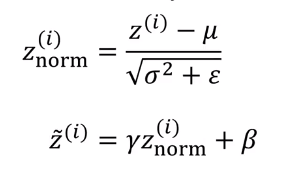

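Let’s pick a layer $l$ and go through the mini-batches $X^{\{1\}}, X^{\{2\}}, \ldots$ one at a time. Training on each mini-batch gives a mean vector for that layer: $\mu^{\{1\}[l]}, \mu^{\{2\}[l]}, \ldots$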

Just as we used an exponentially weighted average to track the current temperature, you use one here to keep track of the latest average value of this mean vector. That exponentially weighted average becomes your estimate of the mean of the $z$ values for that hidden layer. Similarly, you use an exponentially weighted average to keep track of the $\sigma^2$ values: the $\sigma^2$ you see on the first mini-batch in that layer, the $\sigma^2$ you see on the second mini-batch, and so on.
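Written out, the running estimates for a layer can be sketched as the update below. The decay rate $\rho$ (for example 0.9) and the subscript "run" are notation assumed here for illustration, and $\mu^{\{t\}[l]}$, $\sigma^{2\{t\}[l]}$ denote the mean and variance computed on mini-batch $t$ in layer $l$:

$$
\mu_{\text{run}}^{[l]} \leftarrow \rho\,\mu_{\text{run}}^{[l]} + (1-\rho)\,\mu^{\{t\}[l]},
\qquad
\sigma_{\text{run}}^{2\,[l]} \leftarrow \rho\,\sigma_{\text{run}}^{2\,[l]} + (1-\rho)\,\sigma^{2\{t\}[l]}
$$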

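During test time, you use these running estimates of $\mu$ and $\sigma^2$ in place of the mini-batch statistics:

$$
z_{\text{norm}} = \frac{z - \mu}{\sqrt{\sigma^2 + \varepsilon}},
\qquad
\tilde{z} = \gamma\, z_{\text{norm}} + \beta
$$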

In practice, what people usually do is implement an exponentially weighted average: you keep track of the $\mu$ and $\sigma^2$ values you see during training and use that exponentially weighted average, sometimes also called the running average, to get a rough estimate of $\mu$ and $\sigma^2$. You then use those values of $\mu$ and $\sigma^2$ at test time to do the scaling you need of the hidden unit values $z$.
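To make the train/test difference concrete, here is a minimal NumPy sketch of one batch norm layer that keeps running averages. The class name `BatchNorm1D`, the `momentum` parameter, and the column-per-example layout are assumptions made for this illustration, not part of the notes, and no learning of $\gamma$ and $\beta$ is shown.

```python
import numpy as np


class BatchNorm1D:
    """Hypothetical batch norm layer; z has shape (n_units, batch_size)."""

    def __init__(self, n_units, momentum=0.9, eps=1e-8):
        self.gamma = np.ones((n_units, 1))   # scale (learned in a real network)
        self.beta = np.zeros((n_units, 1))   # shift (learned in a real network)
        self.momentum = momentum             # assumed decay rate of the running average
        self.eps = eps
        self.running_mu = np.zeros((n_units, 1))
        self.running_var = np.ones((n_units, 1))

    def forward(self, z, training):
        if training:
            # Statistics of the current mini-batch
            mu = z.mean(axis=1, keepdims=True)
            var = z.var(axis=1, keepdims=True)
            # Exponentially weighted (running) averages across mini-batches
            m = self.momentum
            self.running_mu = m * self.running_mu + (1 - m) * mu
            self.running_var = m * self.running_var + (1 - m) * var
        else:
            # Test time: use the running estimates, so any batch size works
            mu, var = self.running_mu, self.running_var
        z_norm = (z - mu) / np.sqrt(var + self.eps)
        return self.gamma * z_norm + self.beta


rng = np.random.default_rng(0)
bn = BatchNorm1D(n_units=3)

# "Training": feed mini-batches of 64 examples drawn with mean 5 and std 2
for _ in range(200):
    z_batch = rng.normal(loc=5.0, scale=2.0, size=(3, 64))
    bn.forward(z_batch, training=True)

# "Test": a single example, normalized with the running estimates
z_single = rng.normal(loc=5.0, scale=2.0, size=(3, 1))
out = bn.forward(z_single, training=False)
```

After the loop, `bn.running_mu` and `bn.running_var` should sit close to the true mean and variance of the data, which is what lets the layer normalize a single test example without a mini-batch of its own.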