A deep learning algorithm may require tuning the following hyperparameters, listed roughly in order of importance:

Most important:

Second most important:

Third most important:

Fourth most important:

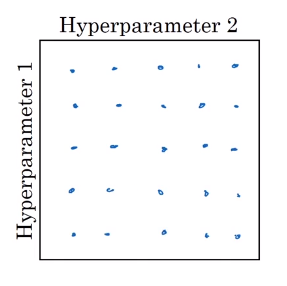

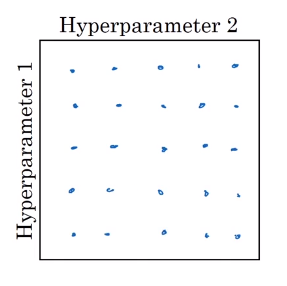

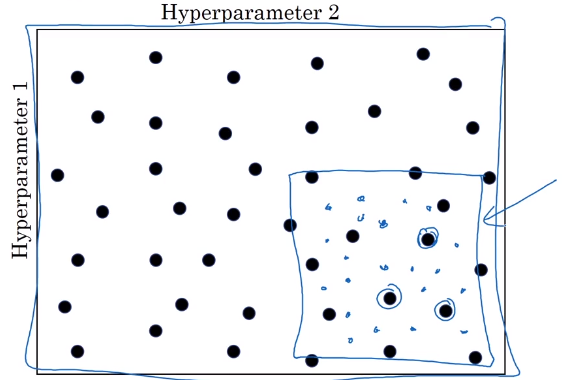

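Grid search: impose a grid on the space of possible hyperparameter values, then go over each cell of the grid one by one and evaluate the model with the values from that cell. The grid method tends to waste resources trying out parameter values that make no sense at all. Moreover, if one of the hyperparameters turns out to matter little, most of the grid's trials are spent re-testing the same few values of the hyperparameters that do matter.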
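To make that waste concrete, here is a minimal sketch comparing a 5 × 5 grid with 25 random trials over two hypothetical hyperparameters: `alpha`, assumed to be important, and `epsilon`, assumed to barely matter. The ranges are illustrative assumptions. The script only counts how many distinct values of `alpha` each method tries, since that is what counts when `epsilon` has little effect.

```python
import itertools
import numpy as np

rng = np.random.default_rng(0)

# Grid search: 5 values per hyperparameter -> 5 x 5 = 25 trials.
alphas = np.linspace(0.1, 1.0, 5)       # hypothetical "important" hyperparameter
epsilons = np.linspace(1e-8, 1e-6, 5)   # hypothetical "unimportant" hyperparameter
grid_trials = list(itertools.product(alphas, epsilons))

# Random search: the same budget of 25 trials, each pair drawn independently.
random_trials = [(rng.uniform(0.1, 1.0), rng.uniform(1e-8, 1e-6)) for _ in range(25)]

print("grid:  ", len(grid_trials), "trials,", len({a for a, _ in grid_trials}), "distinct alphas")
print("random:", len(random_trials), "trials,", len({a for a, _ in random_trials}), "distinct alphas")
```

With the same budget, the grid tests only 5 values of `alpha`, while random search tests 25.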

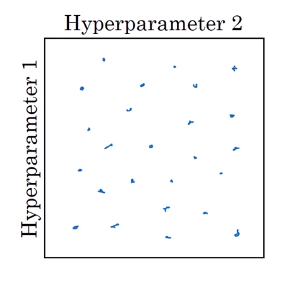

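With the random method, we have a high probability of finding a good set of hyperparameters quickly, because every trial tries a new value of each hyperparameter. After sampling at random for a while, we can zoom into the region that produced good results and sample more densely there. This is called coarse-to-fine search.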
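A minimal coarse-to-fine sketch follows. It assumes a hypothetical `validation_error` function standing in for "train a model and measure its dev-set error", and it assumes that zooming in means taking the bounding box of the 5 best coarse trials; that is one reasonable choice among several, not a fixed rule.

```python
import numpy as np

def validation_error(x, y):
    # Hypothetical stand-in for training a model and measuring dev-set error.
    return (x - 0.3) ** 2 + (y - 0.7) ** 2

def random_search(low, high, n, rng):
    # Sample n points uniformly at random inside the box [low, high).
    points = rng.uniform(low, high, size=(n, 2))
    errors = np.array([validation_error(x, y) for x, y in points])
    return points, errors

rng = np.random.default_rng(0)

# Coarse stage: search the whole unit square.
points, errors = random_search(np.array([0.0, 0.0]), np.array([1.0, 1.0]), 50, rng)

# Zoom in: the bounding box of the 5 best coarse points.
best = points[np.argsort(errors)[:5]]
low, high = best.min(axis=0), best.max(axis=0)

# Fine stage: spend the next 50 trials inside the smaller box.
fine_points, fine_errors = random_search(low, high, 50, rng)

all_points = np.vstack([points, fine_points])
all_errors = np.concatenate([errors, fine_errors])
print("best (x, y):", all_points[np.argmin(all_errors)], "error:", all_errors.min())
```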

Sampling at random doesn’t mean sampling uniformly at random over the range of valid values. Instead, it’s important to pick the appropriate scale on which to explore each hyperparameter.

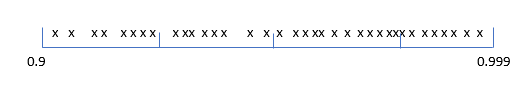

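Let’s start with an example where sampling uniformly at random over the range of values is reasonable.

If a hyperparameter, such as the number of hidden units in a layer, has a sensible range of 50 to 100, it is reasonable to pick values uniformly at random on the line between 50 and 100.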

Another example is choosing the number of layers L from 2 to 4.

In this case, even grid search is reasonable: simply try L = 2, 3, and 4.
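A short sketch of both cases, assuming for illustration that the 50 to 100 range is for the number of hidden units in a layer. It uses NumPy's `Generator.integers`, whose upper bound is exclusive by default, so the call passes 101 to include 100.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hidden units: sample integers uniformly from 50 to 100 inclusive.
# Generator.integers excludes the upper bound by default, hence 101.
hidden_units = rng.integers(50, 101, size=10)

# Number of layers: the range is so small that a plain grid covers it.
layer_choices = [2, 3, 4]

# Pair every layer count with each sampled hidden-unit count.
trials = [(n_layers, int(n_hidden)) for n_layers in layer_choices for n_hidden in hidden_units]
print(len(trials), "trials, e.g.", trials[:3])
```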

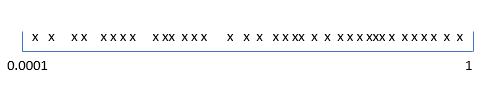

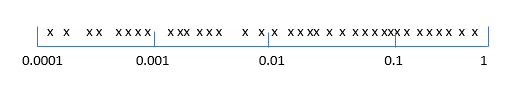

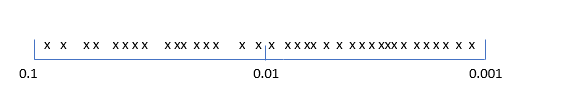

Sometimes, uniformly random sampling is not appropriate. For example, consider sampling the learning rate α from the range 0.0001 to 1.

If we sample uniformly at random from 0.0001 to 1, about 90% of the values fall between 0.1 and 1, and only about 10% between 0.0001 and 0.1. Most of the search budget is spent on a single decade, which is not what we want.

Instead, we can sample on a log scale, so that each decade (0.0001 to 0.001, 0.001 to 0.01, and so on) receives an equal share of the samples.

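In Python:

```python
import numpy as np

r = -4 * np.random.rand()   # r is uniform in [-4, 0)
learning_rate = 10 ** r     # learning_rate lies in (0.0001, 1]
```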

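Here $r \in [-4, 0]$ is uniform, so `learning_rate` $= 10^{r} \in [10^{-4}, 10^{0}]$, and each decade between 0.0001 and 1 is explored equally.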
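The same idea works for any positive range: draw the base-10 exponent uniformly between $\log_{10}$ of the two bounds. The helper `sample_log_uniform` below is a hypothetical name, not a library function. The script also compares how many samples land between 0.1 and 1 under log-scale and uniform sampling.

```python
import numpy as np

def sample_log_uniform(low, high, size, rng):
    """Draw samples whose base-10 exponent is uniform in [log10(low), log10(high)]."""
    r = rng.uniform(np.log10(low), np.log10(high), size=size)
    return 10.0 ** r

rng = np.random.default_rng(0)
lr_log = sample_log_uniform(1e-4, 1.0, 100_000, rng)   # log-scale sampling
lr_lin = rng.uniform(1e-4, 1.0, size=100_000)          # uniform sampling, for comparison

# Fraction of samples in [0.1, 1]: about 1/4 on the log scale (one of four
# decades) versus about 90% with uniform sampling.
frac_log = (lr_log >= 0.1).mean()
frac_lin = (lr_lin >= 0.1).mean()
print(f"log scale: {frac_log:.3f}, uniform: {frac_lin:.3f}")
```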

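Another example where uniform sampling is not appropriate is choosing $\beta$, the hyperparameter used for exponentially weighted averages.

Suppose the range of values we want to use is $\beta = 0.9$ to $0.999$.

It’s not appropriate to sample $\beta$ uniformly from this range. The average covers roughly the last $1/(1 - \beta)$ values, so $\beta$ is far more sensitive near 1: moving $\beta$ from 0.9 to 0.9005 barely changes anything (about 10 values averaged either way), while moving it from 0.999 to 0.9995 changes the average from about 1000 values to about 2000.

Instead, we sample $1 - \beta$ on a log scale: $1 - \beta$ ranges from 0.1 to 0.001, so we draw $r$ uniformly from $[-3, -1]$ and set $\beta = 1 - 10^{r}$.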
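A minimal sketch of sampling $\beta$ this way, assuming $\beta$ should lie between 0.9 and 0.999 (an illustrative range), so that $1 - \beta \in [0.001, 0.1]$ and $r \in [-3, -1]$.

```python
import numpy as np

rng = np.random.default_rng(0)

# Sample 1 - beta on a log scale: 1 - beta in [0.001, 0.1] means r in [-3, -1].
r = rng.uniform(-3, -1, size=10_000)
beta = 1 - 10.0 ** r

# Each decade of 1 - beta gets about half the samples, so beta in [0.9, 0.99]
# and beta in [0.99, 0.999] are explored about equally.
frac_below_099 = (beta < 0.99).mean()
print(f"beta range: [{beta.min():.4f}, {beta.max():.4f}], fraction below 0.99: {frac_below_099:.3f}")
```

Sampling $\beta$ uniformly from 0.9 to 0.999 would instead put about 90% of the samples below 0.99, exactly where $\beta$ matters least.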

Deep learning is used in many different areas, such as NLP, vision, logistics, and ads. Hyperparameter intuitions do not always transfer from one area to another. Therefore, we should not get locked into our intuition; instead, we should re-evaluate it from time to time.

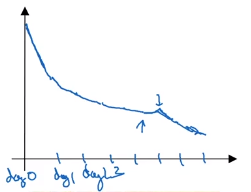

Babysitting works well when we do not have enough computational capacity to train many models at once. We patiently watch one model’s performance over time and tune its hyperparameters day by day, based on how it performed the previous day.

This is like the panda strategy: pandas have very few babies, usually one at a time, and put a lot of effort into making sure each one does well.
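Babysitting is normally driven by a person watching the curves, so the sketch below replaces that judgment with a hypothetical rule: after each "day" of training, keep the model if the loss improved; otherwise roll back to the previous day's model and halve the learning rate. The rollback rule, the toy linear-regression task, and the function names are all assumptions for illustration.

```python
import numpy as np

# Hypothetical toy task: fit y = 3x + 2 with linear regression.
rng = np.random.default_rng(0)
X = rng.uniform(-1, 1, size=200)
y = 3 * X + 2 + 0.1 * rng.normal(size=200)

def loss(w, b):
    return np.mean((w * X + b - y) ** 2)

def train_one_day(w, b, learning_rate, steps=20):
    """Continue training the same model for one 'day' of gradient-descent steps."""
    for _ in range(steps):
        error = w * X + b - y
        w -= learning_rate * 2 * np.mean(error * X)
        b -= learning_rate * 2 * np.mean(error)
    return w, b

w, b, learning_rate = 0.0, 0.0, 1.5   # deliberately too-large starting rate
history = [loss(w, b)]
for day in range(6):
    new_w, new_b = train_one_day(w, b, learning_rate)
    if loss(new_w, new_b) < history[-1]:
        w, b = new_w, new_b            # progress: keep today's model
    else:
        learning_rate /= 2             # got worse: keep yesterday's model, lower the rate
    history.append(loss(w, b))
    print(f"day {day}: lr={learning_rate:.4f} loss={history[-1]:.4f}")
```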

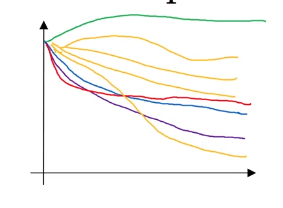

Alternatively, we train many models in parallel, each with a different hyperparameter setting. These models will generally produce different learning curves, and at the end we pick the model with the best learning curve. This approach is known as the caviar strategy, after fish that lay a huge number of eggs and rely on some of them doing well.
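A minimal caviar-strategy sketch, assuming a hypothetical toy task (linear regression trained by gradient descent) and an illustrative list of learning rates. The models run in threads only to keep the example self-contained; in practice each would typically get its own GPU or machine.

```python
import numpy as np
from concurrent.futures import ThreadPoolExecutor

# Hypothetical toy task: fit y = 3x + 2 with linear regression.
rng = np.random.default_rng(0)
X = rng.uniform(-1, 1, size=200)
y = 3 * X + 2 + 0.1 * rng.normal(size=200)

def train(learning_rate, epochs=100):
    """Train one model from scratch; return its learning curve (loss per epoch)."""
    w, b = 0.0, 0.0
    curve = []
    for _ in range(epochs):
        error = w * X + b - y
        w -= learning_rate * 2 * np.mean(error * X)
        b -= learning_rate * 2 * np.mean(error)
        curve.append(np.mean((w * X + b - y) ** 2))
    return curve

# Caviar strategy: launch many models at once, one per hyperparameter setting.
learning_rates = [0.001, 0.01, 0.1, 0.5]
with ThreadPoolExecutor() as pool:
    curves = dict(zip(learning_rates, pool.map(train, learning_rates)))

# Pick the setting whose learning curve ends lowest.
best_lr = min(curves, key=lambda lr: curves[lr][-1])
print("best learning rate:", best_lr, "final loss:", curves[best_lr][-1])
```

Judging by the final loss is the simplest choice; comparing whole curves (for example, their stability) is equally valid.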