When you implement backpropagation, you'll find that there's a test called gradient checking that can really help you make sure your implementation of backprop is correct.

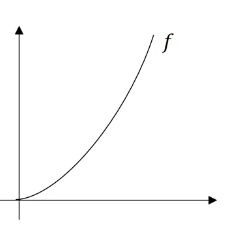

Instead of just nudging $\theta$ to the right to get $\theta + \epsilon$, we're going to nudge it both to the right and to the left, which also gives $\theta - \epsilon$. With $\theta = 1$ and $\epsilon = 0.01$, $\theta + \epsilon$ is 1.01 and $\theta - \epsilon$ is 0.99.

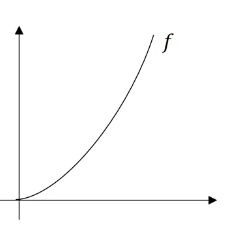

It turns out that rather than taking a small triangle from $\theta$ to $\theta + \epsilon$ and computing the height over the width, you can get a much better estimate of the gradient if you take a larger triangle from $\theta - \epsilon$ to $\theta + \epsilon$ and compute the height over the width of this bigger triangle.

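Writing $f$ for the plotted function, the height of the triangle is $f(\theta + \epsilon) - f(\theta - \epsilon)$. The width of the triangle is $2\epsilon$, so

$$\frac{d}{d\theta} f(\theta) \approx \frac{f(\theta + \epsilon) - f(\theta - \epsilon)}{2\epsilon}$$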

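Taking $f(\theta) = \theta^3$ as the example function, the two-sided estimate at $\theta = 1$ is $\frac{(1.01)^3 - (0.99)^3}{2(0.01)} = 3.0001$. Since the exact derivative is $3\theta^2 = 3$, the above approximation is pretty close: the error is only about 0.0001.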
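The comparison between the small triangle and the bigger triangle can be tried numerically. The following is a minimal sketch, not a definitive implementation: the function names are hypothetical, and the choice of $\theta^3$ as the test function (with exact derivative $3\theta^2$) is an assumption made for illustration.

```python
def one_sided_difference(f, theta, epsilon=0.01):
    """Small triangle: height over width from theta to theta + epsilon."""
    return (f(theta + epsilon) - f(theta)) / epsilon


def two_sided_difference(f, theta, epsilon=0.01):
    """Bigger triangle: height over width from theta - epsilon to theta + epsilon."""
    return (f(theta + epsilon) - f(theta - epsilon)) / (2 * epsilon)


# Assumed example function and its exact derivative (illustrative choice).
def cube(theta):
    return theta ** 3


def cube_gradient(theta):
    return 3 * theta ** 2


theta = 1.0
exact = cube_gradient(theta)

# Absolute error of each estimate against the exact derivative.
one_sided_error = abs(one_sided_difference(cube, theta) - exact)
two_sided_error = abs(two_sided_difference(cube, theta) - exact)

print(f"one-sided error: {one_sided_error:.6f}")
print(f"two-sided error: {two_sided_error:.6f}")
```

With these values the two-sided estimate should land much closer to the exact derivative than the one-sided one. In a real gradient check, the same two-sided estimate would be compared against the gradient that backprop produces rather than against a hand-written derivative.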