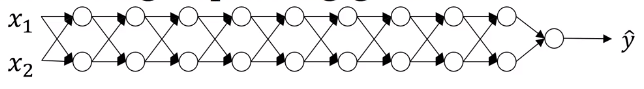

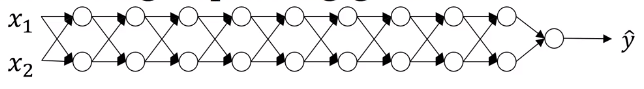

One of the problems of training neural networks, especially very deep ones, is vanishing and exploding gradients. This means that when we’re training a very deep network, the derivatives (slopes) can become either very large or very small, even exponentially so in the depth of the network, and this makes training difficult.

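Assume a linear activation $g(z) = z$ and zero biases $b^{[l]} = 0$. In this case,

$$\hat{y} = W^{[L]} W^{[L-1]} \cdots W^{[2]} W^{[1]} x$$

since

$$z^{[1]} = W^{[1]} x, \quad a^{[1]} = g(z^{[1]}) = z^{[1]}, \quad a^{[2]} = g(z^{[2]}) = W^{[2]} W^{[1]} x, \quad \ldots$$

Let’s assume, for example,

$$W^{[l]} = \begin{bmatrix} 1.5 & 0 \\ 0 & 1.5 \end{bmatrix} \quad \text{for } l = 1, \ldots, L-1,$$

then

$$\hat{y} = W^{[L]} \begin{bmatrix} 1.5 & 0 \\ 0 & 1.5 \end{bmatrix}^{L-1} x$$

so, ignoring the final layer $W^{[L]}$, $\hat{y}$ is essentially $1.5^{L-1} x$.

If $L$ is large then $\hat{y}$ is also large.

Conversely, when

$$W^{[l]} = \begin{bmatrix} 0.5 & 0 \\ 0 & 0.5 \end{bmatrix} \quad \text{for } l = 1, \ldots, L-1,$$

then

$$\hat{y} = W^{[L]} \begin{bmatrix} 0.5 & 0 \\ 0 & 0.5 \end{bmatrix}^{L-1} x$$

and $\hat{y}$ is essentially $0.5^{L-1} x$.

If $L$ is large then $\hat{y}$ is very small.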

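If each $W^{[l]}$ is slightly larger than the identity matrix $I$, then with a very deep network the activations can explode.

If each $W^{[l]}$ is slightly smaller than the identity matrix $I$, then with a very deep network the activations will decrease exponentially.

The same argument applies to the gradients computed during backpropagation, which grow or shrink exponentially with the number of layers.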
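The effect of depth can be checked numerically. The following is a minimal sketch, not part of the original notes: it assumes every layer uses the same weight matrix, a scaled identity `scale * I`, with linear activation and zero bias. The helper name `forward_linear` and the scale values 1.5 and 0.5 are illustrative choices.

```python
import numpy as np


def forward_linear(scale, num_layers, x):
    """Propagate x through num_layers identical linear layers.

    Assumptions (illustrative only): W = scale * I in every layer,
    activation g(z) = z, and bias b = 0.
    """
    W = scale * np.eye(x.shape[0])
    a = x
    for _ in range(num_layers):
        a = W @ a  # z = W a, and a = g(z) = z
    return a


x = np.ones((2, 1))
for scale in (1.5, 0.5):
    for num_layers in (10, 50, 100):
        y_hat = forward_linear(scale, num_layers, x)
        print(f"scale={scale}, layers={num_layers}: y_hat[0] = {y_hat[0, 0]:.3e}")
```

Under these assumptions the output is simply `scale ** num_layers * x`, so a scale slightly above 1 blows the activations up as layers are added, while a scale slightly below 1 drives them toward zero.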

To mitigate this problem, the parameters must be initialized carefully.