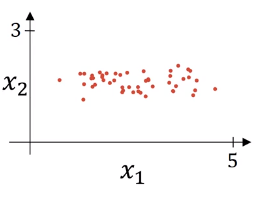

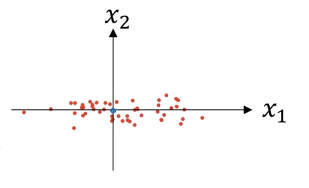

Training set with two input features

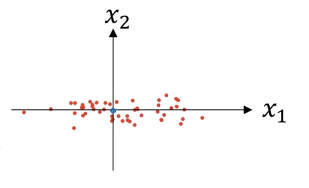

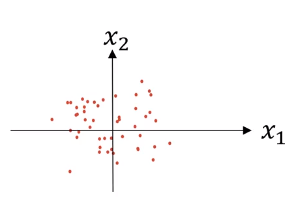

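Normalizing involves two steps:

- Subtract the mean: compute the mean of each feature over the training set and subtract it from every example, so that each feature is centered at zero.

  Notice that, after this step, one feature still has a much larger variance than the other.

- Normalize the variance: divide each feature by its standard deviation over the training set, so that all features end up with the same (unit) variance.

- Use the same mean and variance, computed on the training set, to normalize the test set, so that training and test data go through exactly the same transformation.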
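The steps above can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not a definitive implementation: each example is assumed to be a list of feature values, and it uses the standard definitions $\mu_j = \frac{1}{m}\sum_{i=1}^{m} x_j^{(i)}$ and $\sigma_j^2 = \frac{1}{m}\sum_{i=1}^{m} (x_j^{(i)} - \mu_j)^2$ for feature $j$ over $m$ training examples. The helper names `fit_normalizer` and `normalize`, the toy data, and the small constant `eps` (added to avoid dividing by zero for a constant feature) are all hypothetical.

```python
# Minimal sketch of input normalization in plain Python (no NumPy assumed).
# Helper names, toy data, and eps are illustrative assumptions.

def fit_normalizer(train):
    """Compute per-feature mean and standard deviation on the training set."""
    m = len(train)
    n = len(train[0])
    mu = [sum(x[j] for x in train) / m for j in range(n)]
    # Variance of the mean-centered features, using 1/m as in the definition above.
    var = [sum((x[j] - mu[j]) ** 2 for x in train) / m for j in range(n)]
    sigma = [v ** 0.5 for v in var]
    return mu, sigma


def normalize(data, mu, sigma, eps=1e-8):
    """Subtract the mean, then divide by the standard deviation, per feature.

    eps is a hypothetical safeguard against a zero standard deviation.
    """
    return [[(x[j] - mu[j]) / (sigma[j] + eps) for j in range(len(x))] for x in data]


# Two features with very different spreads (toy data).
train = [[1.0, 10.0], [3.0, 30.0], [5.0, 50.0], [7.0, 70.0]]
test = [[4.0, 20.0]]

mu, sigma = fit_normalizer(train)        # statistics come from the training set only
train_norm = normalize(train, mu, sigma)
test_norm = normalize(test, mu, sigma)   # reuse the same mu and sigma for the test set
```

The key design choice is that `fit_normalizer` runs on the training set only; the test set is transformed with those stored statistics rather than its own.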

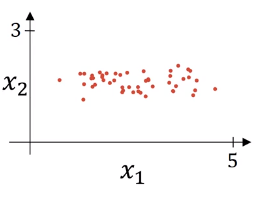

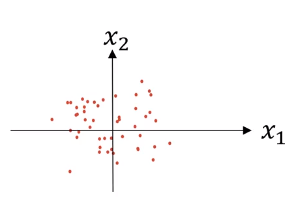


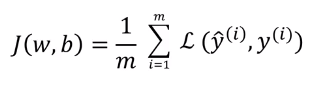

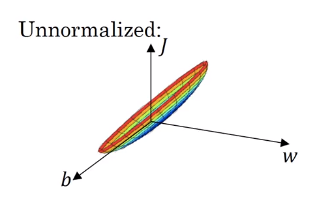

If the inputs are unnormalized, the cost function looks like the one below.

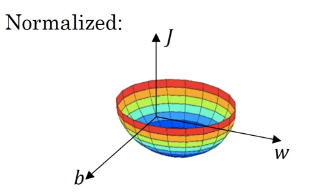

If the inputs are normalized, the cost function looks more symmetrical.

If you run gradient descent on the unnormalized cost function, you may have to use a very small learning rate: the contours are elongated, so larger steps overshoot along the steep direction, and gradient descent needs many steps, oscillating back and forth, before it finally finds its way to the minimum. With the more spherical contours of the normalized cost function, gradient descent can go almost straight to the minimum wherever it starts, so you can use larger steps.
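To make the learning-rate argument concrete, here is a toy sketch. It assumes a hypothetical two-parameter quadratic cost $J(w_1, w_2) = \tfrac{1}{2}(a\,w_1^2 + b\,w_2^2)$ rather than a real model's loss: very different curvatures $a$ and $b$ stand in for the elongated, unnormalized case, and $a = b$ stands in for the round, normalized case. The function `steps_to_converge` and all parameter values are illustrative.

```python
# Toy comparison on a hypothetical quadratic cost
#   J(w1, w2) = 0.5 * (a * w1**2 + b * w2**2)
# Elongated contours (a != b) mimic unnormalized features;
# circular contours (a == b) mimic normalized features.

def steps_to_converge(a, b, lr, start=(1.0, 1.0), tol=1e-6, max_steps=100_000):
    """Run gradient descent; return the step count once both weights are within tol of 0."""
    w1, w2 = start
    for step in range(max_steps):
        if abs(w1) < tol and abs(w2) < tol:
            return step
        # The gradient of J is (a * w1, b * w2).
        w1 -= lr * a * w1
        w2 -= lr * b * w2
    return None  # did not converge within max_steps


# Elongated bowl: any lr > 2 / b makes w2 overshoot with growing amplitude.
# lr = 0.019 sits just under that limit, so w2 oscillates back and forth
# while w1 (shallow direction, a = 1) creeps toward 0 slowly.
elongated = steps_to_converge(a=1.0, b=100.0, lr=0.019)

# Round bowl: equal curvature in both directions, so a large lr is safe.
round_bowl = steps_to_converge(a=1.0, b=1.0, lr=0.9)

# The same large lr on the elongated bowl blows up instead of converging.
diverged = steps_to_converge(a=1.0, b=100.0, lr=0.9)
```

With these toy settings the round bowl converges in a handful of steps, while the elongated bowl needs hundreds of steps at a learning rate just under its stability limit, and fails to converge at the learning rate that works well for the round bowl.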