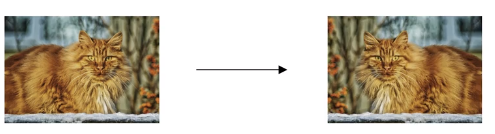

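Getting more training data can be expensive. Flipping each image horizontally and adding the flipped copies to the training set doubles its size. This is not as good as getting the same amount of genuinely new training data, since the flipped copies are partly redundant with the originals, but it costs almost nothing.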
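A minimal sketch of the flip-and-append idea, assuming images are stored in a NumPy array of shape `(N, height, width, channels)` and that a mirrored image keeps its original label. The function name `augment_with_flips` is hypothetical.

```python
import numpy as np

def augment_with_flips(images: np.ndarray, labels: np.ndarray):
    """Return the training set with a horizontally mirrored copy of every image appended.

    Assumes images have shape (N, height, width, channels), so the width axis is axis 2.
    """
    flipped = images[:, :, ::-1, :]                   # mirror each image left-to-right
    x_aug = np.concatenate([images, flipped], axis=0)  # N originals followed by N flips
    y_aug = np.concatenate([labels, labels], axis=0)   # a flipped cat is still a cat
    return x_aug, y_aug

x = np.random.rand(100, 64, 64, 3)
y = np.random.randint(0, 2, size=100)
x_aug, y_aug = augment_with_flips(x, y)
print(x_aug.shape, y_aug.shape)  # (200, 64, 64, 3) (200,)
```

The training set goes from 100 to 200 examples without collecting anything new.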

You could also rotate the image by a random angle, or randomly zoom in or out.
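Deep learning frameworks ship ready-made augmentation transforms for this. The sketch below instead shows the underlying idea with plain NumPy: pick a random angle and zoom factor, then, for every output pixel, look up the source pixel it came from (nearest-neighbour sampling, with pixels that fall outside the original image set to 0). The name `random_rotate_zoom` and the default ranges (±15°, zoom 0.9 to 1.1) are illustrative assumptions, not values from these notes.

```python
import numpy as np

def random_rotate_zoom(image, max_angle_deg=15.0, zoom_range=(0.9, 1.1), rng=None):
    """Rotate by a random angle and zoom by a random factor about the image centre.

    Nearest-neighbour sampling; pixels mapping outside the source image become 0.
    Assumes image shape (height, width) or (height, width, channels).
    """
    rng = np.random.default_rng() if rng is None else rng
    angle = np.deg2rad(rng.uniform(-max_angle_deg, max_angle_deg))
    zoom = rng.uniform(*zoom_range)  # > 1 zooms in, < 1 zooms out

    h, w = image.shape[:2]
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    # Output pixel coordinates, centred on the image centre.
    ys, xs = np.indices((h, w), dtype=float)
    ys -= cy
    xs -= cx
    # Inverse mapping: undo the zoom, then rotate by -angle to find each source pixel.
    cos_a, sin_a = np.cos(angle), np.sin(angle)
    src_x = np.rint((cos_a * xs + sin_a * ys) / zoom + cx).astype(int)
    src_y = np.rint((-sin_a * xs + cos_a * ys) / zoom + cy).astype(int)
    # Copy only the pixels whose source lies inside the original image.
    valid = (src_x >= 0) & (src_x < w) & (src_y >= 0) & (src_y < h)
    out = np.zeros_like(image)
    out[valid] = image[src_y[valid], src_x[valid]]
    return out

rng = np.random.default_rng(0)
img = rng.random((64, 64, 3))
aug = random_rotate_zoom(img, rng=rng)
print(aug.shape)  # (64, 64, 3)
```

Because a new angle and zoom are drawn on every call, applying this each time an image is used gives the model a slightly different version of it, while the label stays the same.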

More examples of data augmentation

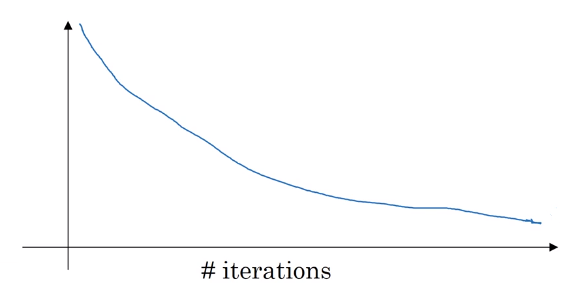

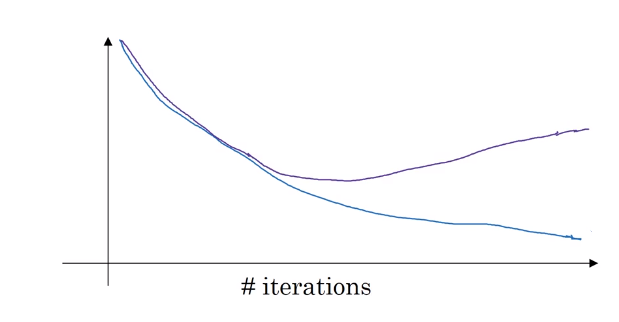

Data augmentation is another regularization technique: like other regularization methods, it helps reduce variance (overfitting).