When you train a neural network, it’s important to initialize the weights randomly. For logistic regression, it was okay to initialize the weights to zero. But if you initialize all the parameters of a neural network to zero and then apply gradient descent, it won’t work.

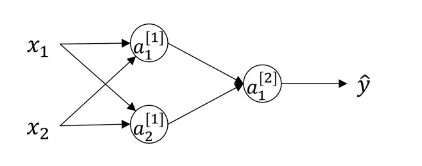

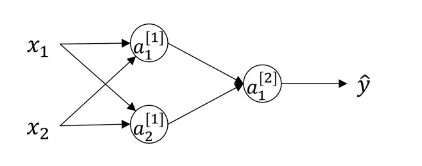

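So, $W^{[1]}$ is a (2,2) matrix, and suppose you initialize it as $W^{[1]} = \begin{bmatrix} 0 & 0 \\ 0 & 0 \end{bmatrix}$ and $b^{[1]} = \begin{bmatrix} 0 \\ 0 \end{bmatrix}$. Initializing $W^{[1]}$ to 0 is a problem, but initializing $b^{[1]}$ to 0 is ok. If $W^{[1]}$ is 0, then $a^{[1]}_1 = a^{[1]}_2$, because both of these hidden units are computing exactly the same function. Furthermore, in the backward propagation, $dz^{[1]}_1 = dz^{[1]}_2$, so the two rows of $dW^{[1]}$ are identical.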

So when the weights get updated, $W^{[1]} := W^{[1]} - \alpha \, dW^{[1]}$ (with $\alpha$ the learning rate), and after every iteration $W^{[1]}$ will have its first row equal to its second row. It’s possible to construct a proof by induction: if you initialize all the values of $W$ to 0, both hidden units start off computing the same function and both have the same influence on the output unit. After one iteration, that same statement is still true, so the two hidden units are still symmetric. Therefore, by induction, after two iterations, three iterations and so on, no matter how long you train your neural network, both hidden units are still computing exactly the same function. In this case, there’s really no point in having more than one hidden unit.
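The symmetry argument can be checked numerically. The following is a minimal sketch, not a reference implementation: the function name `train`, the sigmoid hidden activation, the cross-entropy loss, the tiny AND dataset, and the values of `alpha` and `steps` are all assumptions chosen for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train(W1, b1, W2, b2, X, Y, alpha=0.5, steps=200):
    """Gradient descent on a 2-2-1 network (hypothetical helper; sigmoid units assumed)."""
    m = X.shape[1]
    for _ in range(steps):
        # Forward propagation
        A1 = sigmoid(W1 @ X + b1)
        A2 = sigmoid(W2 @ A1 + b2)
        # Backward propagation for the cross-entropy loss
        dZ2 = A2 - Y
        dW2 = dZ2 @ A1.T / m
        db2 = dZ2.sum(axis=1, keepdims=True) / m
        dZ1 = (W2.T @ dZ2) * A1 * (1.0 - A1)
        dW1 = dZ1 @ X.T / m
        db1 = dZ1.sum(axis=1, keepdims=True) / m
        # Parameter update
        W1, b1 = W1 - alpha * dW1, b1 - alpha * db1
        W2, b2 = W2 - alpha * dW2, b2 - alpha * db2
    return W1, b1, W2, b2

# Illustrative data: the logical AND of two binary inputs (an assumption)
X = np.array([[0.0, 0.0, 1.0, 1.0],
              [0.0, 1.0, 0.0, 1.0]])
Y = np.array([[0.0, 0.0, 0.0, 1.0]])

# Start from all-zero parameters
W1, b1, W2, b2 = train(np.zeros((2, 2)), np.zeros((2, 1)),
                       np.zeros((1, 2)), np.zeros((1, 1)), X, Y)

print(W1)                          # the weights have moved away from zero
print(np.allclose(W1[0], W1[1]))   # whether the two rows of W1 still match
```

Under these assumptions the weights do change during training, yet the two rows of `W1` should remain equal up to floating-point error, so the second hidden unit adds nothing.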

The solution to this problem is to initialize the parameters with small random numbers.

`numpy.random.randn(d0, d1, …, dn)` creates an array of the specified shape and fills it with random values drawn from the standard normal distribution.
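One detail of this signature is easy to get wrong: `randn` takes each dimension as a separate integer argument, whereas `np.zeros` takes the shape as a single tuple. A short sketch of the difference (the variable names are illustrative):

```python
import numpy as np

# randn: dimensions are passed as separate integers
W = np.random.randn(2, 2)
print(W.shape)   # (2, 2)

# zeros: the shape is passed as one tuple
b = np.zeros((2, 1))
print(b.shape)   # (2, 1)

# Passing a tuple to randn is rejected with a TypeError
try:
    np.random.randn((2, 2))
    tuple_rejected = False
except TypeError:
    tuple_rejected = True
print(tuple_rejected)
```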

    import numpy as np

    W1 = np.random.randn(2, 2) * 0.01  # small random weights break the symmetry
    b1 = np.zeros((2, 1))              # biases can start at zero
    W2 = np.random.randn(1, 2) * 0.01
    b2 = 0

Similarly, $W^{[2]}$ is initialized randomly, and $b^{[2]}$ can be initialized to 0.
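The same recipe can be wrapped in a small helper for arbitrary layer sizes. This is a sketch under stated assumptions: the function name `initialize_parameters`, its arguments `n_x`, `n_h`, `n_y`, `scale`, and `seed`, and the dictionary layout are hypothetical, not something the text prescribes.

```python
import numpy as np

def initialize_parameters(n_x, n_h, n_y, scale=0.01, seed=None):
    """Small random weights and zero biases for a one-hidden-layer network.

    n_x, n_h, n_y are the input, hidden, and output layer sizes
    (hypothetical argument names).
    """
    if seed is not None:
        np.random.seed(seed)  # optional, only to make the example repeatable
    return {
        "W1": np.random.randn(n_h, n_x) * scale,  # random, so hidden units differ
        "b1": np.zeros((n_h, 1)),                 # zero biases are fine
        "W2": np.random.randn(n_y, n_h) * scale,
        "b2": np.zeros((n_y, 1)),
    }

params = initialize_parameters(n_x=2, n_h=2, n_y=1, seed=0)
print(params["W1"])
```

Because the two rows of `W1` now start out different, the two hidden units compute different functions from the first iteration onward.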

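The weights are multiplied by a small constant such as 0.01 because if $W$ is large, then $z$ is also large in magnitude, and the activation $a = g(z)$ lands on the flat part of a sigmoid or tanh, where the gradient is close to zero, so learning becomes very slow. A constant of 0.01 should be okay for a shallow neural network.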
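The effect of the scale on the gradient can be seen directly. The sketch below assumes a tanh activation, and uses a fixed weight pattern and input chosen purely for illustration; only the scale factor changes between the two runs.

```python
import numpy as np

def tanh_grad(z):
    """Derivative of tanh evaluated at z."""
    return 1.0 - np.tanh(z) ** 2

# Fixed illustrative values (assumptions, not taken from the text)
base_W = np.array([[1.0, -0.5],
                   [0.3,  0.8]])
x = np.array([[1.0],
              [1.0]])

grads = {}
for scale in (0.01, 100.0):
    z = (base_W * scale) @ x       # pre-activation grows with the weight scale
    grads[scale] = tanh_grad(z)    # slope of tanh at that pre-activation
    print(scale, z.ravel(), grads[scale].ravel())
```

With the 0.01 scale, `z` stays near zero, where the slope of tanh is close to 1. With the 100 scale, `z` is far out on the flat part of the curve and the slope is effectively zero, so gradient descent makes almost no progress.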